Part 2: Classical Ciphers

Introduction

Before modern computers, cryptography relied on manual techniques. These are now grouped under the label classical ciphers. They form the vocabulary of basic operations that reappear in later, stronger systems.

Most classical ciphers fall into two categories: substitution ciphers replace symbols in the message with other symbols, while transposition ciphers rearrange the order of symbols.

Both methods hide the original message, but both are limited in strength. Studying why they fail sets the stage for mechanized cryptography and, later, mathematically grounded designs.

Early Substitution Ciphers

Few ciphers from antiquity were documented: secrecy of the algorithm was considered crucial. A lot of early communications also relied on hiding the existence of the message rather than encrypting it, a process called steganography, which we will cover in a future lecture.

Atbash (c. 600 BCE)

One of the earliest recorded ciphers is Atbash, found in Hebrew texts. It takes the 22-letter Hebrew alphabet and maps the first letter to the last, the second to the second-to-last, and so on. Thus aleph (א) becomes tav (ת), bet (ב) becomes shin (ש), gimel (ג) becomes resh (ר). The name "Atbash" itself comes from this mapping: aleph–tav and bet–shin.

In English, the same idea maps A→Z, B→Y, C→X, etc. For example:

| Original: | A B C D E F G H I J K L M N O P Q R S T U V W X Y Z |
|---|---|
| Substitution: | Z Y X W V U T S R Q P O N M L K J I H G F E D C B A |

Plaintext: MY CAT HAS FLEAS

Ciphertext: NB XZG SZH UOVZH

Atbash requires no key and is trivial to break, but it illustrates the earliest form of substitution.
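The English version of the mapping can be sketched in a few lines of Python. This is a minimal illustration, not a historical reconstruction; the function name `atbash` is an illustrative choice, and the sketch assumes only the letters A to Z are transformed while spaces pass through.

```python
import string

UPPER = string.ascii_uppercase
# Pair the alphabet with its reverse: A->Z, B->Y, C->X, ...
ATBASH = str.maketrans(UPPER, UPPER[::-1])

def atbash(text: str) -> str:
    """Apply Atbash to A-Z; spaces and other characters pass through."""
    return text.upper().translate(ATBASH)

print(atbash("MY CAT HAS FLEAS"))  # NB XZG SZH UOVZH
print(atbash("NB XZG SZH UOVZH"))  # MY CAT HAS FLEAS
```

Because the mapping is its own inverse, the same function both encrypts and decrypts.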

Indian Mlecchita Vikalpa (c. 400 BCE – 200 CE)

The Kama Sutra lists Mlecchita Vikalpa ("obscure speech") as one of the 64 arts of a cultured person. The text does not spell out a method, but historical commentary suggests it referred to simple substitution or phonetic transformations for secret communication. Kautilya's Arthashastra (c. 3rd century BCE) contains more detail, describing substitutions where vowels and consonants could be interchanged. These references show that secret writing was part of Indian culture long before modern ciphers.

Caesar Cipher (shift cipher) (c. 60 BCE)

Julius Caesar used a substitution cipher for military correspondence. Each letter was shifted in the alphabet by a fixed number of positions, usually three. Both parties have to agree on the direction of the shift and the number of positions to shift.

For example, with a shift of 3:

| Original: | A B C D E F G H I J K L M N O P Q R S T U V W X Y Z |
|---|---|
| Substitution: | D E F G H I J K L M N O P Q R S T U V W X Y Z A B C |

Plaintext: MY CAT HAS FLEAS

Ciphertext: PB FDW KDV IOHDV

This method was effective in Caesar's time when literacy itself was rare. By modern standards, it is trivial to break: there are only 25 possible shifts with the English alphabet, all easy to test. Note that Caesar did not encode spaces since spacing between words did not exist until the 7th century CE. A lot of encryption even into the 20th century continued to omit spaces or use the letter 'X' to denote them.
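A short Python sketch makes both the cipher and the brute-force attack concrete. The function name `caesar` is an illustrative choice, and the sketch assumes a forward shift over the 26-letter English alphabet with spaces left untouched.

```python
import string

UPPER = string.ascii_uppercase

def caesar(text: str, shift: int) -> str:
    """Shift each A-Z letter forward by `shift` positions, wrapping at Z."""
    k = shift % 26
    table = str.maketrans(UPPER, UPPER[k:] + UPPER[:k])
    return text.upper().translate(table)

ciphertext = caesar("MY CAT HAS FLEAS", 3)
print(ciphertext)  # PB FDW KDV IOHDV

# Brute force: undo every possible shift and let a reader spot the English.
for shift in range(1, 26):
    print(shift, caesar(ciphertext, -shift))
```

With only 25 candidate shifts, the readable line stands out immediately.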

Monoalphabetic Substitution

A generalization of the Caesar cipher is to allow an arbitrary mapping of the alphabet. This produces 26! (roughly 4 × 10^26) possible keys, which is astronomically large.

At first glance, that looks secure, but the method still leaks information.

The Caesar cipher and other simple substitution methods share a fatal flaw: they preserve the statistical patterns of the underlying language. This weakness led to one of the most important breakthroughs in the history of cryptanalysis.

The First Major Breakthrough in Cryptanalysis

In English, letters appear with very different frequencies. The letter e is by far the most common, making up about 12–13% of typical text. Next come t (around 9%), a (8%), o (7%), and i and n (around 7% each). Rare letters like q, x, and z appear less than 0.2% of the time.

| Letter | Frequency |
|---|---|
| E | 12.7% |
| T | 9.1% |
| A | 8.2% |
| O | 7.5% |
| I | 7.0% |
| X | 0.15% |
| Q | 0.095% |
| Z | 0.074% |

Common two-letter combinations (digrams) such as TH, HE, IN, and ER also appear frequently and in predictable patterns.

Frequency Analysis: The Statistical Attack

Imagine we intercept a long ciphertext and notice that the symbol "Q" appears 13% of the time, far more than any other symbol. It's reasonable to guess that "Q" encodes "e." If the pair "JQ" shows up often, it may represent "he," which would suggest that "J" encodes "h." Once a few letters are identified, patterns emerge and the rest of the cipher falls quickly.

This process is called frequency analysis, first formalized in the 9th century by the Arab scholar Abu Yusuf Al-Kindi.
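The counting step can be sketched in Python. The helper names `letter_frequencies` and `guess_caesar_shift` and the sample ciphertext are illustrative assumptions, not part of the original text. The second helper applies the simplest version of the idea to a Caesar cipher by assuming the most common ciphertext letter stands for E; on short or unusual texts this guess can easily be wrong.

```python
from collections import Counter

def letter_frequencies(text: str) -> list[tuple[str, float]]:
    """Return (letter, percentage) pairs for A-Z, most common first."""
    letters = [c for c in text.upper() if "A" <= c <= "Z"]
    counts = Counter(letters)
    total = len(letters)
    return [(letter, 100 * n / total) for letter, n in counts.most_common()]

def guess_caesar_shift(ciphertext: str) -> int:
    """Guess the shift by assuming the most common ciphertext letter is E."""
    top_letter, _ = letter_frequencies(ciphertext)[0]
    return (ord(top_letter) - ord("E")) % 26

# Hypothetical sample: "MEET ME NEAR THE TREE" under a Caesar shift of 3.
sample = "PHHW PH QHDU WKH WUHH"
print(letter_frequencies(sample)[:3])
print(guess_caesar_shift(sample))  # 3
```

For a general monoalphabetic substitution the same counts are the starting point, but the analyst matches several letters and digrams rather than a single shift.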

Why This Attack Works

The fundamental problem is that simple substitution ciphers are monoalphabetic: each plaintext letter always maps to the same ciphertext letter. This preserves the statistical fingerprint of the original language. No matter how the letters are scrambled, the underlying patterns shine through.

What seemed like an impossibly large key space (26! possible letter mappings) collapses under a statistical attack because the redundancy of natural language remains visible in the ciphertext.

The Need for Random-Looking Ciphertext

This breakthrough revealed a fundamental goal: strong ciphertext should look statistically random. Instead of preserving the uneven frequency distribution of English, secure encryption should produce output where all symbols appear roughly equally often, with no predictable patterns or structures.

English text is highly predictable: much of what each character carries is redundancy rather than new information. A cipher that merely relabels the symbols without eliminating this redundancy leaves the statistical fingerprints that enable frequency analysis. This realization would later be formalized in information theory, but the core insight emerged from these early cryptanalytic successes.

Any cipher that preserves the statistical structure of the plaintext will eventually succumb to analysis. This realization drove cryptographers to seek methods that would obscure or eliminate these telltale patterns.

Attempts to Hide Frequency: Polyalphabetic Substitution

Recognizing the weakness of monoalphabetic substitution, designers began varying the cipher alphabet during encryption. If the same plaintext letter could encrypt to different ciphertext letters depending on its position, frequency analysis would be much harder.

Alberti's Cipher Disk (1466)

In the 15th century, the Italian Renaissance polymath Leon Battista Alberti created what is often regarded as the first practical polyalphabetic cipher. Alberti wasn't just a cryptographer but also an architect, painter, musician, and author. He served in the papal court and understood firsthand the need for stronger ciphers to protect diplomatic correspondence in Renaissance Italy.

Alberti's invention was a pair of concentric metal disks, one of which could rotate relative to the other. Each disk was inscribed with the alphabet around its edge. The larger, fixed disk carried the plaintext alphabet; the smaller, movable disk carried a scrambled ciphertext alphabet. By aligning one letter on the inner disk with one letter on the outer disk, the user set a substitution alphabet.

One limitation was that both parties needed to possess identical cipher disks—the security depended on having the same physical device with the same alphabet arrangements.

How encryption worked

- The sender and receiver agreed on a starting alignment. For example, outer disk A lined up with inner disk G.

- The sender would then encrypt a segment of plaintext using this mapping. In this disk configuration, plaintext R (outer disk) maps to inner M, so R→M. Plaintext P maps to S on the inner disk in this example.

- After some fixed number of characters (or a set number of words), the sender rotated the inner disk to a new position. This changed the substitution alphabet, so the same plaintext letter could encrypt to a different ciphertext letter later in the message.
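The rotation idea can be sketched in Python. Everything specific here is an assumption for illustration: the scrambled `INNER` alphabet is made up (so it does not reproduce the R→M mapping above), and the rule of turning the disk one position every four letters is just one possible agreement between sender and receiver.

```python
OUTER = "ABCDEFGHIJKLMNOPQRSTUVWXYZ"  # fixed disk: plaintext alphabet
INNER = "GKNRBWXCLQTYDMHSZEJPUAFIOV"  # movable disk: hypothetical scrambled alphabet

def alberti_encrypt(plaintext: str, rotate_every: int = 4, step: int = 1) -> str:
    """Encrypt with the inner disk turned `step` places every `rotate_every` letters."""
    out = []
    offset = 0  # how far the inner disk has been turned so far
    letters = (c for c in plaintext.upper() if c in OUTER)
    for count, ch in enumerate(letters):
        if count and count % rotate_every == 0:
            offset = (offset + step) % 26  # turn the inner disk
        out.append(INNER[(OUTER.index(ch) + offset) % 26])
    return "".join(out)

# Outer A starts aligned with inner G; after four letters the disk turns.
print(alberti_encrypt("AAAAAAAA"))  # GGGGKKKK
```

The repeated plaintext letter A encrypts to G at first and to K after the rotation, which is exactly the property that frustrates single-letter frequency counts.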

Why the Alberti cipher was important

By changing the alphabet during the message, Alberti introduced the principle of polyalphabetic substitution: one plaintext symbol can map to many ciphertext symbols depending on position. This innovation broke the one-to-one relationship between plaintext and ciphertext that made monoalphabetic substitution so easy to attack.

Alberti even suggested changing the disk alignment at irregular intervals, not just after a fixed number of characters, to further confuse attackers. That idea — varying the substitution alphabet dynamically — was revolutionary. While later systems like the Vigenère cipher became better known, Alberti's disk was the conceptual breakthrough that inspired them.

The Vigenère Cipher (16th century)

The best-known polyalphabetic system is the Vigenère cipher, described in the 16th century. Despite the name, Blaise de Vigenère did not invent it. An Italian cryptographer, Giovan Battista Bellaso, published the method in 1553. Vigenère, a French diplomat, described it later and added improvements, but his name stuck.

The method uses a keyword that repeats across the plaintext. Each letter of the keyword specifies a Caesar shift for the corresponding plaintext letter. If the keyword is FACE, then the first letter is shifted by F (5), the second by A (0), the third by C (2), the fourth by E (4), and then the sequence repeats.

Example:

Plaintext: MY CAT HAS FLEAS

Keyword: FA CEF ACE FACEF

Ciphertext: RY EEY HCW KLGEX

Each occurrence of the same plaintext letter may encrypt differently depending on which keyword letter aligns with it. That makes simple frequency analysis ineffective.
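The keyword-shift rule can be sketched in Python as follows. The function name `vigenere` and its `decrypt` flag are illustrative choices; the sketch assumes that spaces pass through without consuming a keyword letter, as in the worked example.

```python
def vigenere(text: str, keyword: str, decrypt: bool = False) -> str:
    """Encrypt (or decrypt) A-Z letters with a repeating keyword."""
    shifts = [ord(k) - ord("A") for k in keyword.upper()]
    sign = -1 if decrypt else 1
    out = []
    i = 0  # index of the next keyword letter to use
    for ch in text.upper():
        if "A" <= ch <= "Z":
            shift = sign * shifts[i % len(shifts)]
            out.append(chr((ord(ch) - ord("A") + shift) % 26 + ord("A")))
            i += 1
        else:
            out.append(ch)  # spaces and punctuation are copied unchanged
    return "".join(out)

print(vigenere("MY CAT HAS FLEAS", "FACE"))                # RY EEY HCW KLGEX
print(vigenere("RY EEY HCW KLGEX", "FACE", decrypt=True))  # MY CAT HAS FLEAS
```

Note how the three A's in the plaintext become E, C, and E: the result depends on which keyword letter happens to line up with each one.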

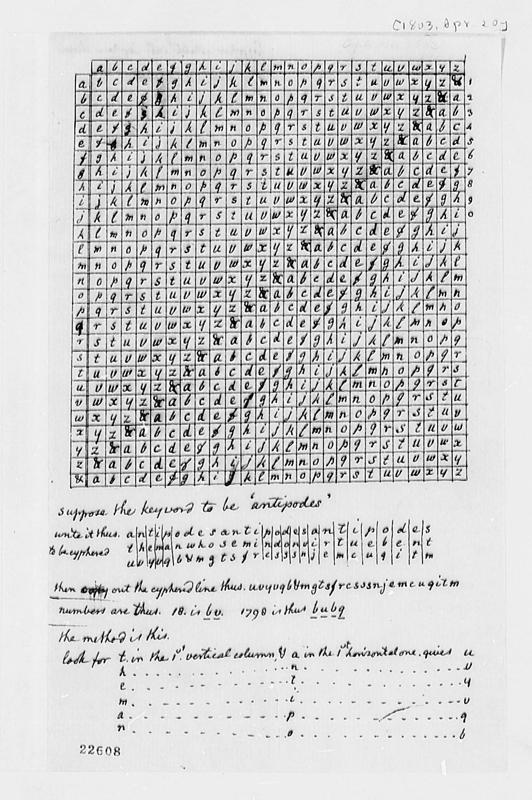

In practice, encryption and decryption were done using a printed lookup table called the Vigenère square or tabula recta. This was a 26×26 grid where each row represented a Caesar cipher with a different shift. The keyword letter identified the row (y-axis), the plaintext letter identified the column (x-axis), and their intersection contained the ciphertext letter.

A B C D E F G H I J K L M N O P Q R S T U V W X Y Z

____________________________________________________

A | A B C D E F G H I J K L M N O P Q R S T U V W X Y Z

B | B C D E F G H I J K L M N O P Q R S T U V W X Y Z A

C | C D E F G H I J K L M N O P Q R S T U V W X Y Z A B

D | D E F G H I J K L M N O P Q R S T U V W X Y Z A B C

...

T | T U V W X Y Z A B C D E F G H I J K L M N O P Q R S

U | U V W X Y Z A B C D E F G H I J K L M N O P Q R S T

...

Z | Z A B C D E F G H I J K L M N O P Q R S T U V W X Y

To encrypt, find the plaintext letter in the top row and the keyword letter in the left column. The cell at their intersection is the ciphertext. To decrypt, find the keyword letter's row, locate the ciphertext letter in that row, and read the plaintext letter at the top of that column. This avoided mental arithmetic and made the cipher practical with pen and paper.

Why it seemed unbreakable

For centuries, the Vigenère cipher was called "le chiffre indéchiffrable"—the unbreakable cipher. By disguising single-letter frequencies and spreading them across multiple alphabets, it appeared immune to the classical techniques that worked on Caesar and monoalphabetic substitutions.

How it was broken

In the 19th century, Charles Babbage in England and Friedrich Kasiski in Prussia showed how to break it. Repeated segments of ciphertext often came from the same repeated plaintext encrypted under the same part of the keyword. By measuring the distances between repeated patterns, an analyst could guess the keyword length. Once that length was known, the ciphertext could be split into that many streams—each one a Caesar cipher. Frequency analysis could then solve each stream.

Example of the attack

Suppose the keyword is CAT (length 3) and the plaintext is long. Whenever the same three-letter sequence of plaintext appears aligned with the keyword, it produces the same ciphertext.

Take the plaintext ATTACKATDAWN with the keyword CAT (C=2, A=0, T=19).

We repeat the keyword until it covers the plaintext:

Plaintext: A T T A C K A T D A W N

Keyword: C A T C A T C A T C A T

Encrypt by shifting each letter forward by the keyword value (mod 26), or looking it up in the table:

A+2=C, T+0=T, T+19=M, A+2=C, C+0=C, K+19=D, A+2=C, T+0=T, D+19=W, A+2=C, W+0=W, N+19=G

Ciphertext = CTMCCDCTWCWG

Now suppose this ciphertext is part of a longer intercepted message, and the sequence CT occurs in multiple places. (Even in this short fragment, CT appears at positions 1 and 7, six letters apart, because the plaintext AT lines up with the keyword letters CA both times.) By measuring the distances between repeats, the analyst finds they are often multiples of 3. That points directly to a key length of 3. Once that is guessed, the ciphertext is split into three streams:

- Stream 1: positions 1, 4, 7, 10 … → Caesar cipher with shift 2 (C)

- Stream 2: positions 2, 5, 8, 11 … → Caesar cipher with shift 0 (A)

- Stream 3: positions 3, 6, 9, 12 … → Caesar cipher with shift 19 (T)

Each stream can be cracked independently with frequency analysis, revealing the keyword.

This example shows how repetitions can disclose the keyword length. With longer texts, the evidence piles up quickly, and what seemed "unbreakable" collapses under systematic analysis.
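The two mechanical steps of the attack, measuring distances between repeats and splitting the ciphertext into streams, can be sketched in Python. The helper names are illustrative assumptions, and the sketch ignores the fact that some repeats in real ciphertext are accidental, which is why analysts look for the length that divides most distances rather than all of them.

```python
from collections import defaultdict

def repeat_distances(ciphertext: str, n: int = 2) -> list[int]:
    """Distances between successive occurrences of each repeated n-gram."""
    positions = defaultdict(list)
    for i in range(len(ciphertext) - n + 1):
        positions[ciphertext[i:i + n]].append(i)
    distances = []
    for starts in positions.values():
        distances.extend(b - a for a, b in zip(starts, starts[1:]))
    return distances

def likely_key_lengths(distances: list[int], max_len: int = 10) -> dict[int, int]:
    """For each candidate length, count how many distances it divides."""
    return {k: sum(d % k == 0 for d in distances) for k in range(2, max_len + 1)}

def split_streams(ciphertext: str, key_length: int) -> list[str]:
    """Stream j holds every key_length-th letter, starting at offset j."""
    return [ciphertext[j::key_length] for j in range(key_length)]

ciphertext = "CTMCCDCTWCWG"
print(repeat_distances(ciphertext))   # [6]: the two CTs are six letters apart
print(split_streams(ciphertext, 3))   # ['CCCC', 'TCTW', 'MDWG']
```

A single distance of 6 is consistent with key lengths 2, 3, or 6; with a longer message, more repeats narrow the choice. Each resulting stream is a plain Caesar cipher and can be attacked with letter counts.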

Lessons

The Vigenère cipher represents both a high point of classical cryptography and a warning. For centuries, it was thought unbreakable, but eventually it fell to systematic analysis. Its history illustrates a recurring theme: what seems unbreakable at first often falls once analysts find patterns to exploit.

Transposition: Scrambling Order Instead of Letters

While substitution ciphers change what letters appear, transposition ciphers keep the original letters but rearrange their positions. These methods don't hide letter frequencies, but they do disrupt the normal patterns and sequences that make text recognizable.

Scytale (5th century BCE)

The scytale was used by Spartans around the 5th century BCE. In wartime, commanders needed to send instructions that could not be read if intercepted. The scytale was a simple but clever solution.

The device consisted of a wooden staff (the scytale) of fixed diameter. A strip of leather or parchment was wound tightly around the staff, and the message was written across it row by row. When unwound, the letters appeared scrambled. To read it, the recipient needed a staff of the same diameter. The “key” to the cipher was the diameter of the staff.

Example

Let's look at a staff diameter that allows 4 letters per row.

Plaintext: MEET AT THE TEMPLE

Written on the staff produces:

M E E T

A T T H

E T E M

P L E X

When the strip of writing is unwound, we see: MAEP ETTL ETEE THMX

Because the plaintext must fit evenly into the grid, extra letters are often added as padding, typically X's at the end, which can introduce small but exploitable patterns. Even in modern ciphers, padding is often used when plaintext must be a multiple of a certain size. It is added before encryption and removed afterwards.

This provided minimal security: trying different diameters would quickly reveal the plaintext, but it demonstrated an early recognition that rearranging letters could hide the meaning of a message.
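The scytale can be modeled as writing letters into rows and reading down the columns. The Python sketch below follows that model; the function names and the `per_row` parameter (standing in for the staff diameter) are illustrative assumptions, and padding with X follows the convention described above.

```python
def scytale_encrypt(plaintext: str, per_row: int, pad: str = "X") -> str:
    """Write letters in rows of `per_row`, then read down the columns."""
    letters = [c for c in plaintext.upper() if c.isalpha()]
    while len(letters) % per_row:
        letters.append(pad)  # pad so the final row is full
    rows = [letters[i:i + per_row] for i in range(0, len(letters), per_row)]
    return "".join(row[col] for col in range(per_row) for row in rows)

def scytale_decrypt(ciphertext: str, per_row: int) -> str:
    """Undo scytale_encrypt; the length must be a multiple of per_row."""
    n_rows = len(ciphertext) // per_row
    # The ciphertext holds the columns back to back, each n_rows long.
    return "".join(ciphertext[col * n_rows + row]
                   for row in range(n_rows) for col in range(per_row))

ciphertext = scytale_encrypt("MEET AT THE TEMPLE", 4)
print(ciphertext)

# Brute force: try every row length that divides the ciphertext evenly.
for per_row in range(2, len(ciphertext)):
    if len(ciphertext) % per_row == 0:
        print(per_row, scytale_decrypt(ciphertext, per_row))
```

The loop at the end shows why the security is minimal: only a handful of row lengths are possible, and one of them prints the message.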

Columnar Transposition (17th century)

A more sophisticated transposition method appeared in Europe and remained in use into the 20th century. Known as columnar transposition, it rearranged letters by writing the message in rows under a keyword, then reading the columns in an order determined by the alphabetical sequence of the keyword letters.

How it works

- Write the keyword and number its letters alphabetically

- Write the plaintext in rows under the keyword

- Read columns in the order specified by the numbers

Example

Plaintext: MEET AT THE TEMPLE

Keyword: ZEBRA → numbered as 5-3-2-4-1

Z E B R A

5 3 2 4 1

M E E T A

T T H E T

E M P L E

Read columns in order 1-2-3-4-5:

Column 1 (A): ATE

Column 2 (B): EHP

Column 3 (E): ETM

Column 4 (R): TEL

Column 5 (Z): MTE

Ciphertext: ATEEHPETMTELMTE
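The three steps can be sketched in Python. The function names are illustrative assumptions; the sketch pads the last row with X when the message does not fill the grid, and it breaks ties between repeated keyword letters from left to right, a common convention that the text above does not specify.

```python
def column_order(keyword: str) -> list[int]:
    """Column indices in reading order: alphabetical by keyword letter."""
    return sorted(range(len(keyword)), key=lambda i: (keyword[i], i))

def columnar_encrypt(plaintext: str, keyword: str, pad: str = "X") -> str:
    """Write the message in rows under the keyword, then read the columns."""
    letters = [c for c in plaintext.upper() if c.isalpha()]
    width = len(keyword)
    while len(letters) % width:
        letters.append(pad)  # fill the final row
    rows = [letters[i:i + width] for i in range(0, len(letters), width)]
    return "".join(row[col] for col in column_order(keyword) for row in rows)

print(column_order("ZEBRA"))  # [4, 2, 1, 3, 0]: read A, then B, E, R, Z
print(columnar_encrypt("MEET AT THE TEMPLE", "ZEBRA"))
```

The receiver reverses the process by cutting the ciphertext into equal-length columns, placing them back under the keyword letters, and reading across the rows.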

Cryptanalysis of Transposition

A columnar transposition preserves single-letter frequencies. If the ciphertext is long enough, an analyst can guess the number of columns (the keyword length) by trying different widths. The ciphertext is written into rows of that width, and the analyst looks at the resulting columns. If the guess is correct, patterns such as common digrams (TH, ER, AN) may appear vertically, giving clues to the right arrangement.

By permuting columns and checking for plausible English fragments, cryptanalysts can eventually reconstruct the keyword order. This is slow by hand but feasible against long messages, which is why columnar transposition was never fully secure.

Attack method:

- Guess the number of columns by trying different widths

- Look for common digrams (TH, ER, AN) appearing vertically

- Permute column orders to find arrangements that produce readable fragments

- Use common words and patterns to confirm the correct arrangement

Weaknesses:

- Letter frequency analysis immediately reveals the language

- Common letter pairs and patterns eventually appear vertically or diagonally

- With enough text, trying different column widths and arrangements reveals patterns

Strengths:

- Simple frequency analysis doesn't directly reveal the plaintext

- The scrambling disrupts word boundaries and common sequences

- Multiple transposition passes can create quite complex rearrangements

Why Transposition Alone Wasn't Enough

Transposition ciphers like the scytale and columnar transposition do not disguise which letters appear, but they disrupt their order. This makes analysis harder but not impossible. Once analysts have enough text, the patterns can be reconstructed. The real strength of these methods came when combined with substitution, as in later systems like ADFGVX.

Combined Systems: Mixing Substitution and Transposition

Recognizing that neither substitution nor transposition alone provided adequate security, some designers experimented with combinations that would cover each other's weaknesses.

Playfair Cipher (1854)

The Playfair cipher was invented by Charles Wheatstone but promoted by Lord Playfair. Unlike previous systems, it encrypted pairs of letters instead of single letters, which helped obscure single-letter frequencies.

How it works

- Choose a keyword and write it into a 5×5 grid, filling in the remaining letters of the alphabet afterward (I and J are usually combined).

Example keyword: MONARCHY

M O N A R

C H Y B D

E F G I/J K

L P Q S T

U V W X Z

- Break the plaintext into letter pairs, adding padding 'X' if needed:

MEET AT DAWN → ME ET AT DA WN

- Encrypt each pair of letters by applying rules based on the positions of the pair in the grid:

  - If both letters are in the same row, replace each with the letter to the right (wrapping)

  - If both letters are in the same column, replace each with the letter below (wrapping)

  - Otherwise, form a rectangle and replace each letter with the one in the same row but in the other column

Plaintext pair ME (same column) → CL

Plaintext pair ET (rectangle) → KL
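The grid construction and the three pair rules can be sketched in Python. The function names are illustrative assumptions, and the sketch takes pairs that have already been split; it does not handle the usual extra rules for double letters within a pair or for odd-length messages.

```python
def build_square(keyword: str) -> list[str]:
    """Return the 25 letters of the 5x5 square, row by row (J is merged into I)."""
    seen = []
    for ch in keyword.upper() + "ABCDEFGHIKLMNOPQRSTUVWXYZ":
        ch = "I" if ch == "J" else ch
        if ch.isalpha() and ch not in seen:
            seen.append(ch)
    return seen

def encrypt_pair(square: list[str], a: str, b: str) -> str:
    """Apply the row, column, or rectangle rule to one pair of letters."""
    ra, ca = divmod(square.index(a), 5)
    rb, cb = divmod(square.index(b), 5)
    if ra == rb:      # same row: take the letter to the right, wrapping
        ca, cb = (ca + 1) % 5, (cb + 1) % 5
    elif ca == cb:    # same column: take the letter below, wrapping
        ra, rb = (ra + 1) % 5, (rb + 1) % 5
    else:             # rectangle: keep the row, swap the columns
        ca, cb = cb, ca
    return square[ra * 5 + ca] + square[rb * 5 + cb]

square = build_square("MONARCHY")
pairs = ["ME", "ET", "AT", "DA", "WN"]
print(" ".join(encrypt_pair(square, a, b) for a, b in pairs))  # CL KL RS BR NY
```

Decryption applies the same rules in reverse: move left within a row, up within a column, and swap columns again for a rectangle.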

Strength: By working with digrams, Playfair eliminated obvious single-letter statistics and required analysis of two-letter frequencies.

Weakness: English digrams are not random: TH, HE, IN, ER are much more common than others. With sufficient ciphertext, frequency analysis of letter pairs could break the cipher.

ADFGVX Cipher (1918)

The ADFGVX cipher was Germany’s most sophisticated field cipher of World War I. It combined substitution with transposition in a two-step process. The name comes from the six letters used in the ciphertext: A, D, F, G, V, and X. These were chosen because their Morse code patterns are easy to tell apart, which reduced errors when messages were transmitted by telegraph.

How it works

1. Start with a 6×6 Polybius square filled with a scrambled set of 26 letters and 10 digits. Rows and columns are labeled with A, D, F, G, V, X.

A D F G V X

A | P H 0 Q G 6

D | 4 M E A 1 Y

F | L 2 N O F D

G | X K R 3 C V

V | S 5 Z W 7 B

X | J 9 U T I 8

2. Each plaintext character is replaced with its coordinates (substitution):

Plaintext M → row D, column D → DD

Plaintext E → row D, column F → DF

Plaintext T → row X, column G → XG

Plaintext A → row D, column G → DG

Plaintext D → row F, column X → FX

Plaintext W → row V, column G → VG

Plaintext N → row F, column F → FF

MEET AT DAWN → DD DF DF XG DG XG FX DG VG FF → DDDFDFXGDGXGFXDGVGFF

3. After substitution, we apply a transposition: the long string of A/D/F/G/V/X symbols is written into rows under a keyword.

For example, if the keyword is SNOWY, we would write:

S N O W Y

_________

D D D F D

F X G D G

X G F X D

G V G F F

The letters are then read off column-by-column in the alphabetical order defined by the keyword (N, O, S, W, Y, that is, columns 2, 3, 1, 4, 5 in this example) to produce the ciphertext:

DXGVDGFGDFXGFDXFDGDF
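Both stages can be sketched in Python using the square shown above. The function names are illustrative assumptions; the sketch skips characters that are not in the square (such as spaces) and allows a short final row rather than padding it.

```python
LABELS = "ADFGVX"
SQUARE = [
    "PH0QG6",
    "4MEA1Y",
    "L2NOFD",
    "XKR3CV",
    "S5ZW7B",
    "J9UTI8",
]

def substitute(plaintext: str) -> str:
    """Replace each letter or digit with its row label and column label."""
    coords = {ch: LABELS[r] + LABELS[c]
              for r, row in enumerate(SQUARE) for c, ch in enumerate(row)}
    return "".join(coords[ch] for ch in plaintext.upper() if ch in coords)

def transpose(symbols: str, keyword: str) -> str:
    """Write symbols in rows under the keyword; read columns alphabetically."""
    width = len(keyword)
    order = sorted(range(width), key=lambda i: (keyword[i], i))
    # symbols[col::width] is one column, read top to bottom.
    return "".join(symbols[col::width] for col in order)

def adfgvx_encrypt(plaintext: str, keyword: str) -> str:
    return transpose(substitute(plaintext), keyword)

print(substitute("MEET AT DAWN"))
print(adfgvx_encrypt("MEET AT DAWN", "SNOWY"))
```

Because every plaintext character becomes two symbols that the transposition then separates, single-letter statistics of the plaintext are spread across the ciphertext.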

Significance: ADFGVX combined complex substitution (characters to coordinate pairs) with columnar transposition, creating a cipher that resisted both frequency analysis and pattern recognition.

Downfall: French cryptanalyst Georges Painvin broke it in 1918 by finding repeated patterns that survived the transposition step, demonstrating that even sophisticated combinations could fall to persistent analysis.

Lessons from Classical Cryptography

The evolution of classical ciphers reveals several crucial insights:

Fundamental Limitations

- Substitution alone preserves statistical patterns that can be exploited through frequency analysis

- Transposition alone preserves letter content while only scrambling positions

- Simple combinations can be defeated by attacking each component separately

- Key repetition in polyalphabetic systems creates exploitable patterns

- Human-scale operations are inherently limited in the complexity they can achieve

Driving Principles Discovered

Through centuries of failure and improvement, classical cryptography established principles that remain relevant:

- Hide statistical patterns in the underlying language

- Avoid repetition that leaks information about keys or methods

- Combine different techniques to cover each other's weaknesses

- Use keys that are long and non-repeating when possible

- Expect that methods will be discovered and build security accordingly

The Need for a Mathematical Foundation

Most importantly, the failure of classical ciphers, even sophisticated ones like ADFGVX, demonstrated that cleverness and complexity alone were insufficient. Cryptography needed a systematic, mathematical approach to:

- Measure and quantify security rather than just hoping methods would work

- Understand what properties made ciphers strong or weak

- Design systems that could provably resist known attacks

- Evaluate new designs before deploying them in critical applications

These realizations set the stage for the next phase of cryptographic development: mechanized systems that could apply transformations too complex for hand calculation, and eventually the theoretical framework that would put cryptography on solid mathematical foundations.