Part 4: Social Engineering Attacks

The Human Vulnerability

Technical exploits are powerful tools, but they require finding vulnerabilities in software, developing exploits, and bypassing various security controls. Social engineering takes a different approach: instead of attacking technology, it attacks people.

Social engineering is the manipulation of people into performing actions or divulging information that compromises security. Rather than exploiting a buffer overflow or a misconfigured firewall, social engineering exploits human psychology: trust, curiosity, fear, helpfulness, and the tendency to make quick decisions under pressure.

Consider the numbers: industry reports commonly estimate that over 90% of successful cyberattacks involve some element of social engineering, most commonly phishing. Zero-day exploits (the most sophisticated technical attacks), by contrast, appear in a tiny fraction of breaches, mostly those carried out by well-funded attackers against specific high-value targets.

Why is social engineering so effective? Several factors make humans vulnerable:

Trust by default. Humans are social creatures who generally trust others unless given reason not to. We want to be helpful. When someone asks for assistance, even a stranger, our instinct is often to help rather than to suspect malicious intent.

Cognitive shortcuts. We make hundreds of decisions daily and can't carefully analyze each one. We rely on heuristics and snap judgments. Does this email look legitimate? The logo looks right, the formatting seems professional, and I am expecting a package; it's probably real. This quick decision-making works well most of the time but can be exploited.

Authority compliance. People tend to obey authority figures, even when instructions seem unusual. If someone claiming to be from IT support asks for your password, many people will provide it despite security training explicitly saying never to share passwords.

Emotional manipulation. Fear, urgency, curiosity, and greed all interfere with rational decision-making. When we're scared or excited or under time pressure, we make poor security decisions.

Security fatigue. Constant warnings and security prompts lead to habituation. When every website has cookie warnings, every app wants permissions, and every document triggers security alerts, legitimate warnings blend into background noise.

Perhaps most importantly: humans can't be patched. If a vulnerability is discovered in software, a patch can fix it on millions of systems simultaneously. Human psychology doesn't work that way. Security awareness training helps, but even well-trained individuals make mistakes, especially under pressure or when distracted.

Kevin Mitnick, who carried out numerous social engineering attacks in the 1980s and 1990s before his 1995 arrest and his later career as a security consultant, demonstrated repeatedly that human manipulation could bypass even sophisticated technical defenses. His experience showed that tricking someone into giving you their password is often easier than breaking into the system technically.

Psychological Principles Attackers Exploit

Effective social engineering isn't random; it leverages specific, well-understood psychological principles. Attackers consciously or unconsciously exploit these cognitive biases and emotional triggers:

Authority

People often obey authority figures without questioning. Stanley Milgram's famous psychology experiments in the 1960s demonstrated that ordinary people would administer what they believed were dangerous electric shocks to others when instructed to do so by an authority figure.

In cybersecurity contexts, attackers impersonate authority figures:

- "This is IT support calling. We need to verify your account. What's your password?"
- "I'm calling from corporate security. We've detected suspicious activity on your account."
- "This is the CEO. I need you to process this wire transfer immediately."

The authority might be technical (IT department), organizational (executives), governmental (IRS, FBI, local police), or institutional (banks, service providers). People are conditioned to comply with legitimate authorities, and attackers exploit this conditioning.

Even when requests seem unusual, authority can override skepticism. An employee might think "this is strange" when the CFO emails asking for wire transfer details, but the thought "I don't want to question the CFO" or "they're probably just in a hurry" leads to compliance.

Urgency and Scarcity

Time pressure interferes with rational decision-making. When we feel rushed, we take shortcuts and skip verification steps we would normally perform.

Attackers create artificial urgency:

- "Your account will be closed in 24 hours unless you verify your information!"
- "Limited time offer; act now or miss out!"
- "Suspicious activity detected; confirm your identity immediately!"
- "Your password expires in one hour. Click here to reset it now."

Scarcity is related; the fear of missing out (FOMO) motivates quick action without careful consideration:

- "Only 3 items left in stock!"
- "This offer expires at midnight!"
- "First 100 respondents get a free gift card!"

Real service providers rarely create extreme urgency for routine matters. Legitimate password expiration notices give days or weeks of warning. Real security alerts explain how to verify the issue through official channels. But in the moment, with a ticking clock, people don't always think this through.

Trust and Familiarity

We trust familiar entities more than unfamiliar ones. Attackers exploit this by impersonating trusted brands, people, or organizations:

- Emails appearing to come from Amazon, Microsoft, or major banks
- Messages that seem to be from colleagues, friends, or family members
- Websites that look identical to familiar services

The impersonation can be quite sophisticated. Attackers steal logos, copy website designs, and mimic writing styles. They might compromise a real colleague's email account and send messages from it, so the message genuinely comes from a trusted source's account even though the colleague didn't write it.

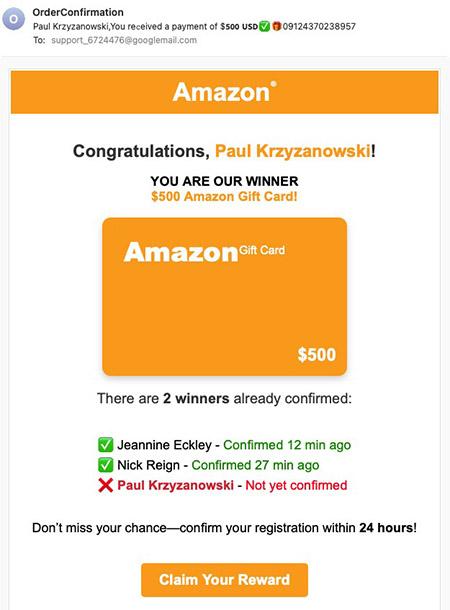

Social proof (the tendency to follow what others are doing) also builds trust. Fake reviews, download counters showing millions of downloads, testimonials from supposed users, and "trending" indicators all suggest that others trust this, so you should too.

Fear

Fear is one of the most powerful emotional triggers. When people are afraid, rational thinking takes a back seat to immediate reaction.

Fear-based attacks include:

- "Your computer is infected with 5 viruses! Click here to remove them immediately!"
- "Your account has been compromised! Change your password now!"
- "Legal action will be taken against you unless you respond within 24 hours!"
- "IRS: Final Notice; Warrant for your arrest will be issued!"

The fear might be of financial loss, legal consequences, identity theft, public embarrassment, or various other threats. Under stress, people click links they wouldn't normally click, provide information they wouldn't normally provide, and ignore warning signs they would normally notice.

Curiosity

Humans are naturally curious. "I wonder what this is" can override "I probably shouldn't click this."

Curiosity-based lures include:

- "Photo from last night..." (what photo?)
- "You won't believe what your coworker said about you..." (what did they say?)
- "Your package couldn't be delivered" (but I wasn't expecting a package; maybe it's a surprise?)
- "Salary_Adjustments_Q4.xlsx" (am I getting a raise?)

Subject lines and filenames are crafted to provoke curiosity. Even people who suspect it might be malicious sometimes click anyway, unable to resist finding out.

The USB drop attack mentioned in the previous section exploits curiosity. Finding a USB drive provokes "I wonder what's on this?" followed by "let me plug it in and see."

Greed and Desire

The promise of something valuable (money, gifts, prizes, free access to paid services) motivates people to ignore risks.

Greed-based attacks include:

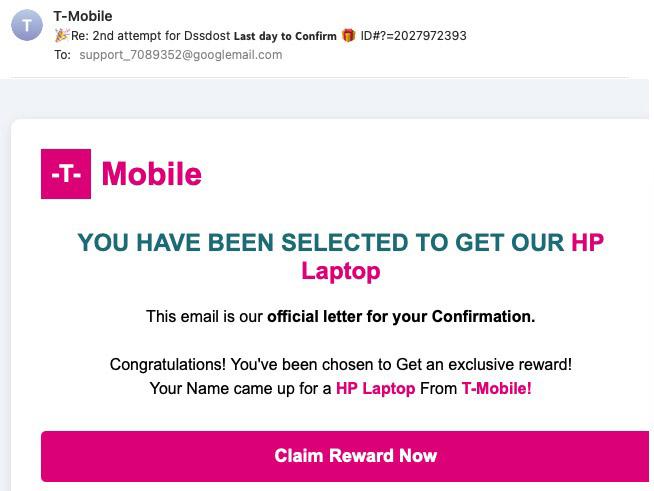

-

"You've won a $500 gift card! Click to claim your prize!"

-

"Congratulations! You're eligible for a tax refund of $847.23!"

-

"Free Netflix/Spotify/Office 365 account; limited time!"

-

"Get rich quick with this one weird trick!"

While these might seem obviously suspicious, they're effective when:

- The offer is plausible (small gift cards are common promotions)
- It comes at an opportune time (during tax season for refund scams)
- The victim wants to believe it (confirmation bias)
- The victim doesn't know the common patterns of scams

Helpfulness and Reciprocity

Most people want to be helpful when someone asks for assistance. Social engineering attacks exploit this prosocial behavior:

- "I locked my keys in the office. Can you let me in?"
- "I'm new and can't get the printer to work. Can you help?"
- "I forgot my password. Can you reset it for me?"

Reciprocity (the human tendency to want to return favors) can also be exploited. An attacker might help someone (holding a door, assisting with a technical issue) and then ask for a "small favor" in return that compromises security.

Consistency and Commitment

Once people commit to something small, they're more likely to comply with larger requests. This is called the foot-in-the-door technique.

An attacker might start with a small, innocuous request: "Hi, I'm doing a survey about office technology usage. Do you use Windows or Mac?" (Most people will answer this harmless question.)

Then escalate: "What version of Windows are you running?" (Still seems harmless.)

Then: "Have you installed the recent security updates?" (Getting more specific but still seems like survey questions.)

Eventually: "I notice you're missing an important security update. I can help you install it; just visit this website and download the patch." (Now the victim is downloading malware, but they've been gradually led to this point through small commitments.)

Combining Multiple Principles

The most effective social engineering attacks combine multiple psychological principles. A phishing email might create urgency ("account will be closed"), leverage authority ("from your bank"), provoke fear ("suspicious activity detected"), and exploit trust (looks exactly like legitimate bank communications).

Defending against social engineering requires recognizing these patterns and consciously applying skepticism even when every instinct says to trust and comply. This is difficult because it requires fighting against fundamental aspects of human psychology.

Phishing: The Foundation

Phishing is the most common form of social engineering attack. The term comes from "fishing": casting out a lure and waiting for victims to bite. The "ph" spelling comes from "phone phreaking," early hacker-culture slang for telephone system exploitation.

A typical phishing attack works like this:

- Distribution: Attackers send mass emails (or text messages, social media messages, etc.) to thousands or millions of potential victims
- Impersonation: Messages appear to come from legitimate sources: banks, service providers, employers, government agencies
- Urgency: Messages create pressure to act quickly without thinking carefully
- Call to action: Messages include a link to click or attachment to open
- Exploitation: Clicking the link leads to a fake website that steals credentials, or the attachment installs malware

Even a very low success rate still results in many victims. If a phishing campaign targets 100,000 people and only 0.1% fall for it, that's still 100 compromised accounts or infected systems.

Common Phishing Lures

Phishing messages use various pretexts to motivate action:

Account verification: "We need to verify your account information. Click here to confirm your identity." This exploits users' fear of losing access to important accounts.

Security alerts: "Unusual activity detected on your account. Click here to review recent logins." This combines fear (someone might be in my account) with the appearance of security consciousness.

Password expiration: "Your password will expire in 24 hours. Click here to reset it now." Many organizations do have password expiration policies, so this seems plausible.

Delivery notifications: "Your package could not be delivered. Click here to reschedule." With the prevalence of online shopping, many people are often expecting packages.

Financial notifications: "You have a pending payment" or "Your invoice is ready" or "Refund available." These exploit both hope (refund!) and fear (unpaid bill!).

Document sharing: "You've received a secure document" or "Shared file from [name]." These seem like normal business communications.

IT requests: "System maintenance required; verify your credentials" or "Security update available." These impersonate legitimate IT communications.

What Happens When You Click

When victims click phishing links, several things might happen:

Credential harvesting: The link leads to a fake login page that looks identical to the legitimate service. Users enter their username and password, which the attacker captures. The page might then redirect to the real service, so victims don't realize they've been compromised. The attacker now has valid credentials they can use immediately or sell.

Malware download: The link triggers a download, perhaps an "urgent security update" or a "document you need to review." The downloaded file is actually malware that installs when opened.

Exploit kit: The link leads to a website hosting an exploit kit. The kit attempts to exploit vulnerabilities in the victim's browser, plugins, or operating system. If successful, malware is installed without the user consciously downloading or running anything.

Further phishing: The link leads to an intermediate phishing page that requests additional information: Social Security numbers, credit card details, security question answers. Victims who have already clicked through multiple pages may continue providing information.

Ransomware: Some phishing attachments directly deliver ransomware. Opening the attachment triggers encryption of the victim's files.

Phishing Techniques

Attackers use various technical and psychological techniques to make phishing messages appear legitimate:

Email Spoofing

Email's core transport protocol, SMTP (Simple Mail Transfer Protocol), was designed in an era when security wasn't a primary concern. By itself, it does not verify that a message actually comes from the claimed sender.

An attacker can configure their email server to send messages claiming to be from any address. The recipient sees the claimed address as the sender, even though the email came from the attacker's server. Email clients display the sender address prominently, and most users don't examine email headers to verify the actual sending server.

Modern email security technologies like SPF (Sender Policy Framework), DKIM (DomainKeys Identified Mail), and DMARC (Domain-based Message Authentication, Reporting, and Conformance) help detect spoofed emails, but implementation is incomplete. Not all organizations use these technologies, and not all email providers enforce them strictly. We will look at these techniques when we cover defenses against malware.
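To make the weakness concrete, here is a minimal sketch, using only Python's standard `email` package, of the kind of sanity check a mail filter might run. It compares the domain in the visible `From:` header with the domain in `Return-Path:` (the envelope sender recorded by the receiving server) and looks for a `dmarc=pass` verdict in `Authentication-Results:`. It assumes the receiving server has added those headers; the function names and sample addresses are hypothetical, and a mismatched `Return-Path` is only a hint, since legitimate bulk mail often uses a separate bounce domain.

```python
from email import message_from_string
from email.utils import parseaddr


def domain_of(header_value: str) -> str:
    """Return the lowercased domain of an address header value, or '' if there is none."""
    _, address = parseaddr(header_value or "")
    if "@" not in address:
        return ""
    return address.rpartition("@")[2].lower()


def sender_warnings(raw_message: str) -> list[str]:
    """Collect simple red flags about the claimed sender of a raw email message."""
    msg = message_from_string(raw_message)
    warnings = []

    from_domain = domain_of(msg.get("From", ""))
    envelope_domain = domain_of(msg.get("Return-Path", ""))
    if envelope_domain and envelope_domain != from_domain:
        warnings.append(f"From domain {from_domain!r} differs from Return-Path domain {envelope_domain!r}")

    # Authentication-Results is written by the receiving server (assumed present here).
    results = " ".join(msg.get_all("Authentication-Results", [])).lower()
    if "dmarc=pass" not in results:
        warnings.append("no dmarc=pass verdict in Authentication-Results")

    return warnings


if __name__ == "__main__":
    spoofed = (
        "Return-Path: <bounce@attacker.example>\n"
        "Authentication-Results: mx.example.net; spf=fail smtp.mailfrom=attacker.example;"
        " dmarc=fail header.from=paypal.com\n"
        "From: PayPal Security <security@paypal.com>\n"
        "Subject: Verify Your Account\n"
        "\n"
        "Dear PayPal User,\n"
    )
    for warning in sender_warnings(spoofed):
        print(warning)
```

The visible `From:` line in the sample claims paypal.com, yet nothing in the transport or authentication results supports that claim, which is exactly the gap SPF, DKIM, and DMARC are meant to close.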

Domain Lookalikes

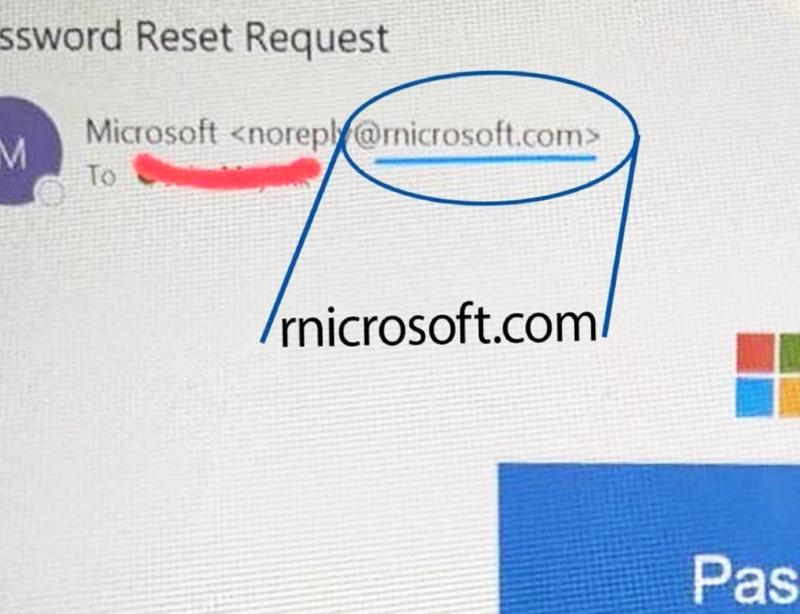

Attackers register domain names that look similar to legitimate domains:

Typosquatting: paypa1.com (number 1 instead of letter l), micros0ft.com (zero instead of o), gooogle.com (extra o)

Homograph attacks: Using Unicode characters that look like Latin letters but actually come from different alphabets. The Cyrillic letter 'а' looks identical to the Latin letter 'a', so a version of paypal.com spelled with a Cyrillic 'а' is a different domain that looks the same. Modern browsers detect many such domains and display them in their ASCII Punycode form (beginning with xn--) instead, but techniques to bypass detection continue to evolve.
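A rough sketch of how software can spot this trick, assuming Python's standard `unicodedata` module and its built-in `idna` codec: it flags domain labels that mix letters from more than one script and shows the ASCII-compatible (Punycode) form that a browser may display instead. The helper names are hypothetical, and this check misses lookalikes written entirely in one non-Latin script, which browsers address with further rules.

```python
import unicodedata


def scripts_in(label: str) -> set[str]:
    """Script names (e.g. 'LATIN', 'CYRILLIC') of the letters in one domain label."""
    # Unicode character names begin with the script, e.g. 'CYRILLIC SMALL LETTER A'.
    return {unicodedata.name(ch, "UNKNOWN").split()[0] for ch in label if ch.isalpha()}


def homograph_report(domain: str) -> dict:
    """Flag mixed-script labels and show the Punycode form a browser might display."""
    mixed = any(len(scripts_in(label)) > 1 for label in domain.split("."))
    ascii_form = domain.encode("idna").decode("ascii")  # Python's built-in IDNA 2003 codec
    return {"domain": domain, "ascii": ascii_form, "mixed_script": mixed}


if __name__ == "__main__":
    print(homograph_report("paypal.com"))       # all Latin letters: not flagged
    print(homograph_report("p\u0430ypal.com"))  # U+0430 is CYRILLIC SMALL LETTER A
```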

Combosquatting: Adding words to legitimate domains: paypal-security.com, microsoftonline-support.com, apple-icloud-verify.com. These look more legitimate than simple typos because they include security-related terms.

Wrong top-level domain: Using a different TLD than the real site, such as .net or .co instead of .com (paypal.co instead of paypal.com), or one of the newer generic TLDs (microsoft.support instead of microsoft.com).

Users often don't look carefully at URLs, especially on mobile devices where the address bar is small or hidden. A quick glance might not catch the subtle difference between the legitimate and malicious domain.
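The patterns above can be told apart mechanically. The following Python sketch classifies a candidate domain against one trusted domain using a hand-written Levenshtein edit distance. The function names and the distance threshold of 2 are assumptions for illustration, and it naively treats the last label as the TLD, so multi-label suffixes such as `co.uk` would need the Public Suffix List.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: fewest single-character insertions, deletions, or substitutions."""
    previous = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        current = [i]
        for j, cb in enumerate(b, start=1):
            current.append(min(previous[j] + 1,               # delete ca
                               current[j - 1] + 1,            # insert cb
                               previous[j - 1] + (ca != cb)))  # substitute (free if equal)
        previous = current
    return previous[-1]


def classify_lookalike(candidate: str, trusted: str) -> str:
    """Roughly classify how a candidate domain relates to a trusted one such as 'paypal.com'."""
    candidate, trusted = candidate.lower().rstrip("."), trusted.lower()
    if candidate == trusted:
        return "exact"
    if candidate.endswith("." + trusted):
        return "subdomain"                     # e.g. www.paypal.com: controlled by the same owner
    name, _, tld = candidate.rpartition(".")   # naive: assumes a single-label TLD
    brand, _, trusted_tld = trusted.rpartition(".")
    if name == brand and tld != trusted_tld:
        return "wrong-tld"
    if brand in name:
        return "combosquatting"
    if edit_distance(name, brand) <= 2:
        return "typosquatting"
    return "unrelated"


if __name__ == "__main__":
    for domain in ["paypal.com", "paypa1.com", "paypal-security.com", "paypal.co", "example.org"]:
        print(domain, classify_lookalike(domain, "paypal.com"))
```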

Visual Deception

Phishing emails often look remarkably similar to legitimate communications:

Stolen branding: Attackers copy logos, color schemes, fonts, and layouts from legitimate organizations. Email templates are usually not secret; attackers can simply receive a legitimate email from the organization and copy its design.

Professional formatting: Well-designed emails with proper grammar and professional language appear more trustworthy than poorly written ones. While phishing emails were once notable for poor English and obvious errors, modern phishing campaigns often have excellent writing quality.

Real signatures: Attackers might copy email signatures from legitimate emails, including names, titles, phone numbers, and even actual photos of real employees.

Legitimate surrounding content: The phishing email might include real information about the victim (their name, account number, or recent transaction) to appear more legitimate. This information might come from data breaches, public records, or previous reconnaissance.

Link Obfuscation

Phishing emails hide where links actually go:

Display text differs from URL: HTML email allows display text to differ from the actual link destination. The victim sees one address displayed as text but clicking goes to a completely different address. Many users don't hover over links to check the actual destination before clicking.
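A mail filter can catch the simplest version of this trick, where the visible text is itself a URL. This Python sketch, built on the standard `html.parser` and `urllib.parse` modules, pulls out each link's `href` and visible text and reports links whose text names a different host than the one they actually point to. The class and function names are hypothetical; links with generic text such as "Click here" cannot be checked this way, which is why hovering to see the real destination still matters.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit


class LinkAuditor(HTMLParser):
    """Collect (href, visible text) pairs from an HTML email body."""

    def __init__(self):
        super().__init__()
        self.links = []   # list of (href, text) tuples
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href") or ""
            self._text = []

    def handle_data(self, data):
        if self._href is not None:  # only collect text inside an <a> element
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def host(url: str) -> str:
    """Hostname of a URL; bare text like 'www.paypal.com' is treated as if it had a scheme."""
    if "://" not in url:
        url = "http://" + url
    return (urlsplit(url).hostname or "").lower()


def mismatched_links(html: str) -> list[tuple[str, str]]:
    """Return (visible text, real href) for links whose text looks like a URL on another host."""
    auditor = LinkAuditor()
    auditor.feed(html)
    suspicious = []
    for href, text in auditor.links:
        looks_like_url = "." in text and " " not in text
        if looks_like_url and host(text) != host(href):
            suspicious.append((text, href))
    return suspicious


if __name__ == "__main__":
    body = (
        '<p>Sign in at <a href="https://paypal.com.account-verify.example/login">'
        "https://www.paypal.com</a> or visit "
        '<a href="https://www.paypal.com/help">https://www.paypal.com/help</a>.</p>'
    )
    print(mismatched_links(body))
```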

URL shorteners: Services like bit.ly, tinyurl.com, and similar URL shorteners hide the real destination. While these have legitimate uses, they're also abused by attackers to conceal malicious URLs.

Redirects: The initial link might go to a legitimate but compromised website that immediately redirects to the malicious site. This makes the link appear safer in email security scans.

Legitimate-looking long URLs: Attackers create URLs with multiple subdomains and paths that look plausible. A URL might include "paypal" somewhere in the middle of a long domain name, and cursory examination might focus on that familiar word without noticing the actual domain structure.
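The reliable way to read a URL is to extract its hostname and check it from the right: the host must be the trusted domain itself or end in a dot followed by that domain. A minimal Python sketch of that rule, with a hypothetical helper name:

```python
from urllib.parse import urlsplit


def belongs_to(url: str, trusted_domain: str) -> bool:
    """True only if the URL's host is trusted_domain itself or a subdomain of it."""
    host = (urlsplit(url).hostname or "").rstrip(".")  # urlsplit lowercases the hostname
    trusted_domain = trusted_domain.lower()
    return host == trusted_domain or host.endswith("." + trusted_domain)


if __name__ == "__main__":
    print(belongs_to("https://www.paypal.com/signin", "paypal.com"))                     # True
    print(belongs_to("https://paypal.com.account-verify.example/signin", "paypal.com"))  # False
    print(belongs_to("https://secure-paypal.com/", "paypal.com"))                        # False
```

The second URL contains "paypal.com" near the start, but its registered domain is account-verify.example; reading the hostname from the right is what exposes that.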

Attachment Tricks

When phishing uses attachments instead of links:

Double extensions: Windows hides known file extensions by default. A file named document.pdf.exe displays as only document.pdf, so users see what appears to be a safe PDF file when it's actually an executable.
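A simple check for this trick looks at the last two extensions rather than the first one. The Python sketch below uses `pathlib.PurePath.suffixes`; the function name is hypothetical and the extension sets are illustrative assumptions, not a complete list of dangerous file types.

```python
from pathlib import PurePath

# Illustrative extension sets (assumptions, not complete lists).
EXECUTABLE_EXTENSIONS = {".exe", ".scr", ".com", ".bat", ".cmd", ".js", ".vbs", ".ps1", ".msi"}
DOCUMENT_EXTENSIONS = {".pdf", ".doc", ".docx", ".xls", ".xlsx", ".txt", ".jpg", ".png"}


def is_disguised_executable(filename: str) -> bool:
    """Flag names like 'document.pdf.exe': a document extension followed by an executable one."""
    suffixes = [s.lower() for s in PurePath(filename).suffixes]
    return (
        len(suffixes) >= 2
        and suffixes[-1] in EXECUTABLE_EXTENSIONS
        and suffixes[-2] in DOCUMENT_EXTENSIONS
    )


if __name__ == "__main__":
    print(is_disguised_executable("document.pdf.exe"))  # True: Explorer may show just "document.pdf"
    print(is_disguised_executable("document.pdf"))      # False
```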

Archive files: ZIP or RAR archives containing malware. Email scanners might not scan inside archives, especially password-protected ones. The phishing email might say "The attached file is password-protected for security. The password is: 1234" which sounds reasonable but actually just bypasses scanning.
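Even when an archive is password-protected, standard ZIP encryption protects file contents but usually leaves member names readable, so a gateway can still notice an encrypted archive that contains an executable. A minimal sketch with Python's `zipfile` module (ZIP only; RAR would need a separate library), where the function name and the list of risky extensions are assumptions:

```python
import io
import zipfile

# Illustrative extension list (an assumption, not a complete blocklist).
RISKY_EXTENSIONS = (".exe", ".scr", ".js", ".vbs", ".bat", ".cmd", ".ps1", ".lnk", ".docm", ".xlsm")


def inspect_zip(data: bytes) -> dict:
    """Report encrypted and executable-looking members of a ZIP attachment without extracting it."""
    report = {"encrypted": [], "risky": []}
    with zipfile.ZipFile(io.BytesIO(data)) as archive:
        for info in archive.infolist():
            if info.flag_bits & 0x1:  # general-purpose flag bit 0: this entry is encrypted
                report["encrypted"].append(info.filename)
            if info.filename.lower().endswith(RISKY_EXTENSIONS):
                report["risky"].append(info.filename)
    return report


if __name__ == "__main__":
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as archive:
        archive.writestr("Invoice.pdf.exe", b"MZ")  # stand-in bytes, not real malware
        archive.writestr("readme.txt", b"hello")
    print(inspect_zip(buffer.getvalue()))  # {'encrypted': [], 'risky': ['Invoice.pdf.exe']}
```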

Macro-enabled documents: As discussed earlier, Office documents with macros that execute malicious code when enabled.
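For the newer Office Open XML formats, a document's macros live in a part named `vbaProject.bin` inside the file's ZIP container (for example `word/vbaProject.bin` in a `.docm`), so its presence can be detected without opening the document. A hedged Python sketch with a hypothetical function name; legacy `.doc` and `.xls` files use a different container format and are out of scope here:

```python
import io
import zipfile


def has_vba_project(data: bytes) -> bool:
    """True if an Office Open XML file (e.g. .docm, .xlsm) contains a VBA macro project part."""
    try:
        with zipfile.ZipFile(io.BytesIO(data)) as package:
            return any(name.lower().endswith("vbaproject.bin") for name in package.namelist())
    except zipfile.BadZipFile:
        # Legacy binary formats (.doc, .xls) are not ZIP packages; this sketch does not inspect them.
        return False


if __name__ == "__main__":
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as package:
        package.writestr("[Content_Types].xml", "<Types/>")
        package.writestr("word/vbaProject.bin", b"\x00")  # placeholder bytes, not a real macro
    print(has_vba_project(buffer.getvalue()))  # True
```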

Icons: Using PDF, Word, or Excel icons for executable files. Users often judge files by their icon, assuming a file with a PDF icon is a PDF.

Spear Phishing: Targeted Attacks

While mass phishing casts a wide net, hoping for any victims, spear phishing targets specific individuals or organizations with customized messages.

The key differences:

Research: Attackers research their targets beforehand. They gather information from LinkedIn (job titles, responsibilities, projects), social media (interests, connections, recent activities), company websites (org charts, press releases, technologies used), and other sources.

Personalization: Messages reference specific people, projects, or contexts relevant to the target. Instead of generic "Dear customer," they use real names and mention real situations.

Context: The message fits naturally into the target's work or life. A phishing email to a finance employee might reference vendor payments. One to a developer might reference a code repository. One to an HR person might reference applicant materials.

Plausibility: Because the message is customized and researched, it seems much more plausible. Recipients are far more likely to click links or open attachments that appear relevant to their specific situation.

Example of generic phishing:

From: security@paypal.com

Subject: Verify Your Account

Dear PayPal User,

Your account has been temporarily limited. Click here to verify your information and restore full access.

Thank you,

PayPal Security Team

Example of spear phishing:

From: mary.johnson@company.com

Subject: Q4 Budget Review - Chicago Office

Hi John,

Can you review the attached budget projections for our Chicago office expansion we discussed last week? I'd like your input before tomorrow's executive meeting with Tom and Sarah.

Thanks,

Mary

[Attachment: Q4_Budget_Chicago_Office.xlsx]

The second message is far more convincing because it:

- Uses real names (John, Mary, Tom, Sarah)
- References a real project (Chicago office expansion)
- Mentions a real event (tomorrow's meeting)
- Comes from an internal email address
- Uses natural business language

How did the attacker get this information? Perhaps from:

- LinkedIn showing these people work at the same company in relevant roles
- Company press releases mentioning the Chicago expansion
- Previous emails stolen from a compromised account
- Social media posts about the project

The attachment might be a malicious Excel file with macros, or the sender address might be spoofed (not really from Mary), or Mary's account might actually be compromised.

The Business Email Compromise Variant

A particularly costly form of spear phishing is Business Email Compromise (BEC), which targets financial transactions.

CEO Fraud involves an attacker impersonating a CEO or other executive and emailing the finance department requesting an urgent wire transfer:

From: ceo@company.com

Subject: Urgent - Wire Transfer Needed

I'm in a meeting with potential acquisition targets and need you to wire $250,000 to the account below.

This is time-sensitive as the deal needs to close today.

Please confirm once the transfer is complete.

[Account details]

The urgency, authority (CEO), and plausibility (companies do make time-sensitive payments for acquisitions) combine to motivate compliance. The finance employee might not follow normal verification procedures because the request comes from the CEO.

Vendor Email Compromise involves attackers compromising a vendor's email account or impersonating them:

From: accounts@vendorcompany.com

Subject: Updated Payment Information

Hi,

We've recently changed banks and need to update our payment information in your system. Please use the following account details for future payments:

[Attacker's account details]

Thank you,

Vendor Accounts Payable Team

The company pays legitimate invoices to the attacker's account instead of the real vendor. This might not be discovered until the real vendor complains about non-payment weeks or months later.

Business Email Compromise attacks have caused massive financial losses. The FBI's Internet Crime Complaint Center (IC3) reported more than $43 billion in exposed losses (actual and attempted) from BEC between June 2016 and December 2021. Individual attacks have stolen tens of millions of dollars from single organizations.

Real examples include:

- Toyota Boshoku (automotive supplier) lost $37 million in 2019 to BEC fraud
- Crelan Bank (Belgian bank) lost €70 million in 2016
- Austrian aerospace company FACC lost €42 million in 2016

These attacks succeed despite organizations having financial controls because they exploit trust and authority. An employee who receives what appears to be a legitimate request from the CEO may bypass normal approval processes.

Whaling: Targeting Executives

Whaling is spear phishing that specifically targets C-level executives: CEOs, CFOs, CIOs, and other high-ranking individuals.

Why target executives?

Access to sensitive information: Executives have access to strategic plans, financial data, M&A information, and other high-value intelligence.

Financial authority: They can approve large transactions, authorize payments, and make binding commitments.

Can bypass controls: Executives often have the authority to override security policies or demand exceptions.

Valuable credentials: Access to an executive's email or systems provides entry to restricted information and the ability to impersonate them for further attacks.

Whaling attacks often use different lures than typical phishing:

Legal threats: "You've been named in a lawsuit. Attached is the complaint." Executives are more likely to be involved in legal matters and will want to review documents immediately.

Regulatory compliance: "SEC investigation notice" or "IRS audit notification." Executives must respond to regulatory matters.

Board communications: "Confidential board meeting materials" or messages appearing to come from board members.

High-level business opportunities: "Confidential acquisition opportunity" or "Investment proposal."

Example:

From: law-firm@legal-domain.com

Subject: Legal Notice - Immediate Attention Required

Mr. Johnson,

Your company has been named as a defendant in a class-action lawsuit regarding data privacy violations. Please review the attached complaint and contact our office within 48 hours.

Failure to respond may result in default judgment.

[Attachment: Legal_Complaint_Case_6789.pdf]

(Actually: malware)

Executives are often more vulnerable to social engineering than might be expected:

Less security training: Some organizations exempt executives from mandatory security training.

Use personal devices: Executives may use personal phones or computers that lack corporate security controls.

Delegate security: Assistants handle email filtering and preliminary screening, so executives may not develop the same skepticism as other employees.

Time pressure: Constant meetings and travel mean executives make quick decisions without careful review.

Authority mindset: Because executives are accustomed to giving rather than taking orders, they may not expect to be targeted by social engineering.

Typosquatting and Similar Domains

Typosquatting exploits the human tendency to make errors. Attackers register domain names that are common misspellings of legitimate domains. Typosquatting targets two kinds of error:

- Users may make mistakes while typing: pressing adjacent keys, accidentally skipping a character, or typing an extra character.
- Users may misread domain names and be fooled by lookalike characters, or may not know what the true domain should be (is it verizon-wireless.com or verizonwireless.com?).

Common typosquatting patterns:

Character substitution:

- g00gle.com (zeros instead of the letter o)
- paypa1.com (number one instead of the letter l)
- micros0ft.com (zero instead of o)

Omitted characters:

- gogle.com (missing one o)
- facbook.com (missing e)
- amazn.com (missing o)

Extra characters:

- gooogle.com (extra o)
- payppal.com (extra p)
- yahooo.com (repeated o, easy to type accidentally)

Adjacent keys:

- goohle.com (h is next to g on the keyboard)

Wrong TLD:

- google.net instead of google.com
- microsoft.org instead of microsoft.com

Combosquatting:

- google-security.com
- microsoft-support.net
- apple-verify.com
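Defenders use the same patterns in reverse: brand-protection teams and open-source tools such as dnstwist generate likely typo variants of their own domains, then watch for new registrations or register them defensively. The Python sketch below covers omission, doubling, adjacent-key, lookalike-character, and wrong-TLD variants; the function name, the partial keyboard map, the substitution table, and the TLD list are illustrative assumptions.

```python
# Partial QWERTY neighbour map (an assumption; a real tool would cover every key).
QWERTY_NEIGHBOURS = {
    "a": "qwsz", "e": "wrsd", "g": "ftyhvb", "l": "kop", "o": "iklp", "p": "ol", "s": "awedxz",
}
# Lookalike characters attackers commonly swap in (illustrative, not exhaustive).
LOOKALIKES = {"o": "0", "l": "1", "i": "1"}
ALTERNATE_TLDS = ("com", "net", "org", "co")


def typo_variants(domain: str) -> set[str]:
    """Generate simple typo variants of a domain such as 'google.com' for defensive monitoring."""
    name, _, tld = domain.lower().rpartition(".")
    names = set()
    for i, ch in enumerate(name):
        before, after = name[:i], name[i + 1:]
        names.add(before + after)                          # omitted character: gogle
        names.add(before + ch + ch + after)                # doubled character: gooogle
        for key in QWERTY_NEIGHBOURS.get(ch, ""):
            names.add(before + key + after)                # adjacent key: goohle
        if ch in LOOKALIKES:
            names.add(before + LOOKALIKES[ch] + after)     # lookalike character: g0ogle
    candidates = {n + "." + tld for n in names}
    candidates |= {name + "." + alt for alt in ALTERNATE_TLDS if alt != tld}  # wrong TLD
    candidates.discard(domain.lower())
    return candidates


if __name__ == "__main__":
    print("goohle.com" in typo_variants("google.com"))  # True
    for candidate in sorted(typo_variants("paypal.com"))[:10]:
        print(candidate)
```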

How Typosquatting is Used

Credential harvesting: The fake domain hosts a phishing page that looks identical to the real service. Users who mistype the URL and don't notice arrive at the fake page. They enter credentials, which the attacker captures. The page might then redirect to the real site, so users don't realize anything happened.

Malware distribution: The fake domain hosts fake download pages. Users searching for software downloads might land on the typosquatting domain and download malware thinking it's legitimate software.

Advertising revenue: Some typosquatted domains simply show advertisements. The typosquatter earns money from clicks while users look for the site they actually wanted.

Phishing infrastructure: The similar domain is used in phishing emails. An email from security@paypal-verify.com looks more legitimate than one from security@randomdomain.com, even though neither is actually PayPal.

Package Name Typosquatting

Typosquatting isn't limited to web domains. Package repositories for software development have similar issues:

npm (JavaScript):

- cross-env (legitimate) vs. crossenv (malicious)
- event-stream (legitimate) vs. event-streem (malicious)

PyPI (Python):

- requests (legitimate) vs. requsets (malicious)
- urllib3 (legitimate) vs. urlib3 (malicious)

Developers who mistype package names in their dependency specifications might install malicious packages. These malicious packages often contain:

- Data exfiltration code that steals environment variables, credentials, or source code
- Backdoors that provide access to the developer's system
- Supply chain attacks that inject malware into the developer's application
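One way to catch a mistyped dependency before installing it is to compare each requested name against a list of popular packages and flag names that are close but not identical. This sketch uses Python's standard `difflib.get_close_matches`; the function name, the allowlist, and the 0.85 similarity cutoff are assumptions, and a legitimately distinct package with a similar name would also be flagged for review.

```python
import difflib

# Hypothetical allowlist; in practice this might be the registry's most-downloaded packages.
POPULAR_PACKAGES = ["requests", "urllib3", "numpy", "pandas"]


def possible_typosquats(requested: list[str], popular: list[str] = POPULAR_PACKAGES,
                        cutoff: float = 0.85) -> dict[str, str]:
    """Map each requested name that is close to, but not exactly, a popular package onto that package."""
    findings = {}
    for name in requested:
        if name in popular:
            continue  # exact match: the package the developer most likely meant
        closest = difflib.get_close_matches(name, popular, n=1, cutoff=cutoff)
        if closest:
            findings[name] = closest[0]
    return findings


if __name__ == "__main__":
    print(possible_typosquats(["requsets", "flask", "requests"]))  # {'requsets': 'requests'}
```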

The impact can be severe. In March 2025, a typosquatting campaign targeted the Go ecosystem with at least seven malicious packages designed to install hidden loader malware, primarily targeting Linux and macOS systems in the financial sector.

In November 2024, a campaign targeted hundreds of popular JavaScript libraries, focusing on cryptocurrency-related packages with tens of millions of weekly downloads.

Defending Against Typosquatting

Bookmarks: Instead of typing URLs, use bookmarks for frequently visited sites. This eliminates typing errors.

Password managers: These autofill credentials only on the correct domain. If you type paypa1.com instead of paypal.com, your password manager won't offer to fill in your PayPal password, which signals something is wrong.
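The sketch below shows why this works, under the simplifying assumption that saved credentials are keyed by the exact origin (scheme, host, and port) where they were stored. Real password managers differ in details (some also match subdomains of the saved site), but a lookalike such as paypa1.com never matches. The vault contents and function names are hypothetical.

```python
from urllib.parse import urlsplit

# Hypothetical vault: each credential is stored under the exact origin where it was saved.
VAULT = {
    ("https", "www.paypal.com", 443): ("alice@example.com", "example-password"),
}


def origin(url: str) -> tuple[str, str, int]:
    """(scheme, host, port) of a URL, filling in the default port for http and https."""
    parts = urlsplit(url)
    default_port = {"http": 80, "https": 443}.get(parts.scheme, 0)
    return parts.scheme, parts.hostname or "", parts.port or default_port


def autofill(url: str):
    """Return saved credentials only on an exact origin match; lookalike domains get nothing."""
    return VAULT.get(origin(url))


if __name__ == "__main__":
    print(autofill("https://www.paypal.com/signin") is not None)  # True
    print(autofill("https://www.paypa1.com/signin"))              # None
```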

Careful verification: Look carefully at URLs before entering sensitive information. Check the domain name specifically, not just that "paypal" appears somewhere in the URL.

Package verification: In software development, carefully check package names before adding dependencies. Use package lock files that specify exact versions. Review packages before first use.
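For Python projects, one lightweight check is to make sure every entry in a `requirements.txt` file pins an exact version with `==`, which pip can combine with its `--require-hashes` mode to reject anything unexpected. A minimal sketch with a hypothetical function name; it is not a full parser of pip's requirements syntax:

```python
import re


def unpinned_requirements(requirements_text: str) -> list[str]:
    """Return requirement lines that do not pin an exact version with '=='."""
    problems = []
    for raw_line in requirements_text.splitlines():
        # pip treats '#' at the start of a line or after whitespace as a comment.
        line = re.sub(r"(^|\s)#.*$", "", raw_line).strip()
        if not line or line.startswith("-"):  # skip blank lines and options such as -r or --hash
            continue
        if "==" not in line:
            problems.append(line)
    return problems


if __name__ == "__main__":
    sample = "requests==2.31.0\nurllib3>=1.26  # loose range\n# comment\n\n-r dev.txt\nnumpy\n"
    print(unpinned_requirements(sample))  # ['urllib3>=1.26', 'numpy']
```

Pinning does not by itself catch a misspelled name, so it works best alongside the name review described above.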

Corporate DNS filtering: Organizations can block known typosquatting domains at the DNS level.

Defensive registration: Large companies often register common typos of their domains defensively to prevent abuse. For instance, Google owns gogle.com, ggoogle.com, and many similar variations.

Vishing and Smishing

Social engineering isn't limited to email. Voice phishing (vishing) and SMS phishing (smishing) use phone calls and text messages respectively.

Vishing: Voice Phishing

Vishing attacks use phone calls to manipulate victims. Common scenarios:

Tech support scams: Attackers call claiming to be from Microsoft, Apple, or other tech companies:

"Hello, this is Microsoft Security. We've detected viruses on your computer. I need remote access to fix the problem. Please go to TeamViewer.com and download the remote access software..."

The victim, worried about the supposed virus, downloads legitimate remote access software and gives the attacker control of their computer. The attacker then might:

- Install actual malware
- Steal files and data
- Lock the system and demand payment
- Steal banking credentials visible in saved passwords or browser history

IRS/Tax scams: Attackers claim to be from the IRS or equivalent tax authority:

"This is the IRS calling about your tax return. There's a problem that requires immediate payment. If you don't pay within 24 hours, a warrant will be issued for your arrest."

The fear of legal consequences motivates victims to make payments, often via gift cards. Gift cards are hard to trace and effectively impossible to recover once the card numbers have been handed over.

Bank verification: Attackers call claiming to be from the victim's bank:

"We've detected unusual activity on your account. Can you verify your account number and the last four digits of your card so I can look up your account?"

Once they have enough information, they can access the account or use the information for identity theft.

Corporate impersonation: In targeted attacks, callers might claim to be from the company's IT department:

"This is IT. We're troubleshooting network issues and need your login credentials to test your account's access."

A 2020 trend involved hackers-for-hire offering vishing services specifically targeting work-from-home employees. Attackers would:

-

Create company-branded phishing pages for major corporations

-

Call employees working at home, claiming to be from IT

-

Explain they're troubleshooting VPN issues

-

Walk the employee through entering credentials on the phishing page

-

Capture the credentials for later use or immediate sale

Some sophisticated vishing campaigns create fake LinkedIn profiles for supposed IT staff members, adding legitimacy to the calls.

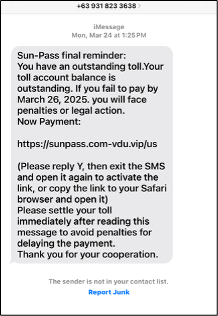

Smishing: SMS Phishing

Text message phishing has grown significantly with the ubiquity of smartphones. Smishing is effective because:

Higher trust: People often trust text messages more than emails, especially if they appear to come from known contacts or familiar services.

Small screens: Mobile devices have limited screen space, making it harder to verify URLs or notice suspicious details.

Less filtering: SMS has less sophisticated spam filtering than email.

Immediacy: People check texts quickly and may respond without thinking carefully.

Common smishing examples:

Package delivery:

Your package couldn't be delivered. Reschedule delivery:

[shorturl.com/abc123]

With widespread online shopping, many people are frequently expecting packages.

Account alerts:

Bank Alert: Unusual activity detected on your account.

Verify now: [link]

Toll/traffic violations:

This scam surged in 2025, exploiting automated toll collection systems.

COVID-19 and health-related:

COVID-19 exposure notification. View details: [link]

These exploited pandemic concerns and were widespread in 2020-2022.

Prize notifications:

Congratulations! You've won a $500 Walmart gift card.

Claim here: [link]

SMS Two-Factor Authentication Bypass

Smishing can defeat two-factor authentication through real-time relay attacks:

-

Attacker phishes username and password through a fake login page

-

Attacker uses those credentials to log into the real service

-

Real service sends 2FA code to victim's phone

-

Attacker's phishing page prompts victim to enter the 2FA code they just received

-

Victim enters the code on the phishing page

-

Attacker receives the code and enters it on the real service

-

Attacker is now fully logged in with valid 2FA

This happens in real time, within the brief validity window of the one-time code. That window is about 30 seconds for typical authenticator-app codes and often a few minutes for SMS codes. From the victim's perspective, they're just going through a normal login with 2FA. They don't realize they're handing the code to an attacker who is simultaneously logging into their real account.
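Authenticator-app codes are usually TOTP values (RFC 6238). The sketch below shows why relaying works. The code is computed only from a shared secret and the clock, so nothing in it records which website the user typed it into. The server accepts it from whoever submits it within the window. The RFC 6238 test secret and the one-window clock-skew allowance are illustrative choices. SMS codes are typically random values generated by the server rather than TOTP, but they are equally unbound to the site.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HOTP (RFC 4226): HMAC-SHA1 of an 8-byte counter, dynamically truncated."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F                      # low nibble picks 4 bytes
    value = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30) -> str:
    """TOTP (RFC 6238): HOTP whose counter is the number of `step`-second windows."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step))


def server_accepts(secret: bytes, code: str, at: float, step: int = 30) -> bool:
    """Accept the current window or the previous one (a common clock-skew allowance)."""
    window = int(at // step)
    return any(hmac.compare_digest(hotp(secret, w), code) for w in (window, window - 1))


if __name__ == "__main__":
    secret = b"12345678901234567890"                # RFC 6238 test secret, not a real key
    phished = totp(secret, at=59)                   # victim types this into the fake page
    print(phished)                                  # 287082
    print(server_accepts(secret, phished, at=65))   # True: attacker relays it 6 s later
    print(server_accepts(secret, phished, at=125))  # False: the window has passed
```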

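This is why security experts recommend moving away from SMS-based 2FA, which is also exposed to interception and SIM swapping attacks. App-based codes (Google Authenticator, Authy) avoid those two weaknesses. However, a user can still be tricked into typing an app-generated code into a phishing page. Hardware security keys (YubiKey) that use the FIDO2/WebAuthn standard also resist real-time relay. The browser binds each login to the website's actual domain, so a response produced on a phishing site is useless on the real one.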
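Hardware security keys that implement FIDO2/WebAuthn resist relay for one reason: the browser, not the web page, reports which origin the login is happening on, and the key's response covers that origin. The sketch below models only that idea. It is a deliberate simplification in two ways. First, an HMAC over a shared key stands in for the per-site public-key signature a real authenticator produces. Second, `bank.example` and `bank-login.example` are placeholder domains.

```python
import hashlib
import hmac
import json
import secrets

# Stand-in for the authenticator's per-site credential. Real WebAuthn uses a
# key pair and the server stores only the public key; HMAC keeps this short.
DEVICE_KEY = secrets.token_bytes(32)
EXPECTED_ORIGIN = "https://bank.example"


def authenticator_respond(challenge: str, origin: str) -> tuple[bytes, bytes]:
    """The browser supplies `origin` from the address bar; the page cannot choose it."""
    client_data = json.dumps({"challenge": challenge, "origin": origin}).encode()
    return client_data, hmac.new(DEVICE_KEY, client_data, hashlib.sha256).digest()


def server_verify(challenge: str, client_data: bytes, tag: bytes) -> bool:
    """Check integrity first, then that the response is for our challenge and origin."""
    expected = hmac.new(DEVICE_KEY, client_data, hashlib.sha256).digest()
    if not hmac.compare_digest(expected, tag):
        return False  # client data was altered after the authenticator responded
    data = json.loads(client_data)
    return data["challenge"] == challenge and data["origin"] == EXPECTED_ORIGIN


if __name__ == "__main__":
    # The attacker starts a login on the real site and forwards its challenge
    # to the phishing page open in the victim's browser.
    challenge = secrets.token_urlsafe(16)
    relayed = authenticator_respond(challenge, "https://bank-login.example")
    print(server_verify(challenge, *relayed))  # False: wrong origin
    direct = authenticator_respond(challenge, EXPECTED_ORIGIN)
    print(server_verify(challenge, *direct))   # True: genuine login
```

The attacker cannot simply rewrite the origin field in the relayed response. Doing so breaks the integrity check, which is the property the real signature provides.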

Social Media Engineering

Social media platforms provide rich sources of information for social engineering and can themselves be attack vectors.

Information Gathering

Attackers use social media for reconnaissance:

LinkedIn reveals:

-

Job titles and responsibilities

-

Current employer and department

-

Work history and skills

-

Professional connections (who knows whom)

-

Recent activities and posts about projects

Facebook and Instagram reveal:

-

Personal interests and hobbies

-

Family and friend relationships

-

Locations (check-ins, photos with geotags)

-

Travel plans ("Excited for my trip to Paris next week!")

-

Life events (new jobs, moves, purchases)

Twitter/X reveals:

-

Opinions and interests

-

Real-time activities

-

Professional conferences and events attended

-

Technology preferences and complaints

This information enables highly targeted spear phishing. Knowing someone is traveling next week allows an attacker to send "Your flight has been canceled" phishing emails at the right time. Knowing someone is interested in photography enables trojan horses disguised as photo editing software.

Direct Attacks via Social Media

Social media platforms themselves can be attack vectors:

Fake connection requests: On LinkedIn, attackers send connection requests that appear to be from industry colleagues, recruiters, or potential business partners. Once connected, they can:

-

Send malicious links in direct messages

-

Gather more detailed information about the victim's network

-

Use the connection for later spear phishing ("Your LinkedIn connection [name] recommended you for...")

Compromised accounts: When an attacker compromises someone's social media account, they can message all that person's contacts with malicious links. These messages are highly trusted because they come from a real friend or colleague's account.

Malicious links in posts:

"Your friend tagged you in a photo! Click to see: [link]"

Fake job offers: Particularly on LinkedIn, attackers post fake job opportunities:

"Hi! I noticed your profile and think you'd be perfect for a role at our company. Here's the job description: [link to malicious PDF]"

Developers and tech professionals are often targeted with offers to review code or contribute to open-source projects, where the "code" is actually malware.

Brand impersonation: Fake customer support accounts impersonate legitimate companies. A user posts a complaint, and a fake support account responds, asking them to send sensitive information via direct message.

Influence Operations

Some social engineering operates at scale through coordinated campaigns:

Fake personas: Attackers create entire fake identities on social media. These accounts post regularly, build follower counts, and establish apparent legitimacy over months or years. Eventually, they're used to:

-

Distribute malicious links that seem to come from established, trusted accounts

-

Gather intelligence about potential targets

-

Build relationships with specific individuals for targeted attacks

Long-term relationship building: In sophisticated attacks, particularly those carried out by nation-state actors, social media personas might interact with targets over extended periods, building trust before ultimately delivering malicious content.

In-Person Social Engineering

While much social engineering occurs online, physical access attacks remain effective.

Tailgating and Piggybacking

Tailgating (or piggybacking) involves following an authorized person through a secured door without proper authentication.

Common scenarios:

-

Carrying boxes or coffee with both hands, appearing to need help

-

Wearing delivery company uniforms

-

Dressing as contractors or maintenance workers

-

Simply walking confidently behind someone and catching the door before it closes

People are naturally inclined to hold doors open for others, especially when someone appears to need help or looks like they belong. Corporate culture often emphasizes courtesy and teamwork, which attackers exploit.

Impersonation

Physical impersonation uses costumes, fake ID badges, and confident behavior:

Uniforms: Delivery service uniforms (FedEx, UPS), maintenance worker outfits, or security guard uniforms grant psychological authority. People don't question someone who looks like they belong there.

ID badges: Fake employee badges can be created easily with printed photos and lanyards. From a distance, or with a quick glance, they appear legitimate.

Props: Carrying a ladder, toolbox, or maintenance equipment makes someone appear to be working legitimately. Carrying a clipboard or tablet in a rugged case and looking purposeful is remarkably effective; people assume someone with a clipboard has authorization for whatever they're doing.

Dumpster Diving

Dumpster diving involves searching through trash for sensitive information:

-

Documents containing passwords or system information

-

Sticky notes with credentials written on them

-

Discarded hard drives that haven't been properly wiped

-

Organizational charts and employee directories

-

Failed printouts containing confidential information

While companies often have document shredding policies, enforcement is inconsistent. A single document carelessly thrown away instead of shredded might contain enough information for a targeted attack.

Shoulder Surfing

Shoulder surfing means observing someone entering passwords, PINs, or viewing sensitive information:

-

Looking over someone's shoulder on public transit

-

Watching from a distance with binoculars or telephoto lenses

-

Observing in coffee shops or airports where people work on laptops

-

Standing behind someone at an ATM

Privacy screens that limit viewing angles help defend against shoulder surfing, as does being aware of surroundings when entering sensitive information.

USB Drop Attacks

As mentioned earlier, leaving infected USB drives for people to find exploits curiosity. Variations include:

Labeled drives: Labels like "Executive Salary Information," "Confidential - Attorney Work Product," or "Private" increase the likelihood someone will plug in the drive out of curiosity.

Targeted drops: Leaving drives in locations where specific people will find them: near their office, in their department's area, or in parking spaces assigned to specific individuals.

Multiple drops: Leaving many drives increases the odds that someone will plug one in. Even if 95% of people don't take the drive or are suspicious, 5% is often enough.
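The arithmetic behind "5% is often enough" is the probability that at least one of n drives gets plugged in: 1 - (1 - p)^n. The sketch assumes, purely for illustration, that each drive is found by a different person. It also assumes each finder independently plugs the drive in with probability p = 0.05.

```python
def p_at_least_one(p_plug: float, drives: int) -> float:
    """Probability that at least one of `drives` independent finders plugs one in.

    Complement rule: the only way to get zero infections is for every finder
    to decline, which happens with probability (1 - p_plug) ** drives.
    """
    return 1 - (1 - p_plug) ** drives


if __name__ == "__main__":
    for n in (1, 10, 20, 50):
        print(f"{n:>2} drives: {p_at_least_one(0.05, n):.0%}")
```

Under these assumptions, one drive gives a 5% chance and 10 drives about 40%. Twenty drives already give roughly a 64% chance that at least one is plugged in, and 50 drives roughly 92%.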

Sidebar: A Penetration Test Story

Security consultant Bob has permission to test a company's physical security. He dresses as an IT technician: khaki pants, polo shirt with a fake company logo, and carries a laptop bag and clipboard. He arrives at the building's main entrance during the morning rush when employees are streaming in.

Bob waits for someone to badge through the door, then walks confidently behind them carrying his laptop bag. The employee holds the door open for him. "Thanks!" Bob says cheerfully. Now he's inside the secured facility.

Bob walks through the office building purposefully, clipboard in hand, occasionally stopping to "check" something on his clipboard and look at ceiling tiles or network jacks. Nobody questions him. People assume someone with a clipboard who looks like they're inspecting things has authorization to be there.

He finds an empty conference room and plugs a small device into an available network port. This device creates a wireless network that Bob can access from outside the building, giving him network access as if he were physically inside.

Bob then walks to the server room. The door is locked with a keycard reader. He waits nearby, clipboard in hand, appearing to be inspecting a network panel. When an employee badges into the server room, Bob follows them in: "Thanks, I'm just checking some connections in here."

The employee doesn't question it; Bob looks like IT staff, carries appropriate props, and acts like he belongs. Once inside, Bob could plug additional devices into network equipment, copy data, or install malware.

Total time from entering the building to having full network access: about 30 minutes. Bob never broke a lock, defeated any technological control, or did anything overtly suspicious. He simply looked like he belonged and exploited people's natural helpfulness and reluctance to question someone who appears to have authority.

The company learned important lessons about their physical security, implementing policies requiring all visitors to be escorted, verification of anyone accessing sensitive areas, and training employees that it's okay to politely ask "Can I help you find someone?" when encountering unfamiliar people.

Scareware: Social Engineering as the Attack

Sometimes social engineering isn't the delivery mechanism for malware; it is the attack itself.

Scareware uses fake warnings to scare users into paying money or taking harmful actions. Unlike trojans that might actually install malware, scareware often has no real technical component; it's pure psychological manipulation.

Fake Virus Warnings

A user browses a website and suddenly sees:

[WARNING]

Your computer is infected with 5 viruses!

Your files are at risk!

Click here to remove threats immediately!

The pop-up might show fake "system scans" with scrolling lists of supposed threats. A countdown timer creates urgency: "Viruses will activate in 02:47." The design might mimic legitimate antivirus software.

What happens if the user clicks:

Scenario 1: They're directed to pay for fake "antivirus software." They purchase a useless or nonexistent product. Their credit card information is stolen.

Scenario 2: They download software that is actually malware, making the fake warning become reality.

Scenario 3: They're locked into a loop of increasingly urgent warnings designed to panic them into calling a phone number for "tech support."

Tech Support Scams

Tech support scams often begin with scareware warnings but escalate to phone calls:

-

User sees fake warning on their computer

-

Warning says "Call Tech Support: 1-800-XXX-XXXX" and makes the browser difficult to close

-

Panicked user calls the number

-

"Tech support" answers and asks to remote into their computer

-

User downloads legitimate remote access software (TeamViewer, AnyDesk, etc.)

-

Scammer takes control and performs theater: opening system logs, pointing to normal entries and claiming they're viruses

-

Scammer demands hundreds of dollars to "fix" the nonexistent problem

-

Alternatively, scammer installs actual malware, steals data, or encrypts files and demands ransom

The entire premise is false; there was never any virus, but the fear and urgency led the user through a series of increasingly harmful actions.

Fake Legal Threats

Some scareware uses legal fear instead of virus fear:

[WARNING] ILLEGAL ACTIVITY DETECTED

Your Windows license is invalid!

Pirated software detected!

Law enforcement has been notified!

Or:

FBI - COMPUTER HAS BEEN LOCKED

Your computer has been locked due to viewing illegal content.

You must pay a fine of $500 to unlock your computer.

These particularly target less technical users who might believe that law enforcement could remotely lock their computer or that their legitimate software license could suddenly become "invalid."

Why Scareware Works

Scareware is effective because:

Fear overrides logic. When scared, people act impulsively rather than rationally. They don't stop to think "can a website really detect viruses on my computer?" or "would the FBI really lock my computer with a web page?"

Appears urgent. Countdown timers, flashing warnings, and alarm sounds create pressure to act immediately without careful thought.

Exploits lack of technical knowledge. Users who don't understand how computers and security actually work are more susceptible to technical-sounding claims.

Uses authority. References to Microsoft, FBI, or law enforcement leverage authority compliance.

Browser hijacking. Some scareware makes the browser difficult to close, entering fullscreen mode or opening multiple windows. Users may panic and comply just to make it stop.

Defending against scareware requires recognizing that:

-

Legitimate antivirus software doesn't pop up in web browsers from random websites

-

Microsoft and other OS vendors don't scan your computer through your web browser

-

Law enforcement doesn't lock computers with pop-up windows

-

If your computer were actually compromised, the attackers would not warn you through a browser pop-up and offer to fix the problem

The best response is to close the browser (using Task Manager if necessary) and run a legitimate antivirus scan from known security software.

Recognizing Social Engineering

Defending against social engineering requires developing skepticism and learning to recognize common patterns.

Red Flags in Messages

Messages that should trigger suspicion:

Language and tone:

-

Urgent or threatening language ("Act now!" "Within 24 hours!" "Final notice!")

-

Too good to be true offers ("You've won!" "Free money!" "One weird trick!")

-

Generic greetings ("Dear user," "Dear customer," "Valued member")

-

Requests for sensitive information (passwords, full account numbers, Social Security numbers)

-

Spelling and grammar errors (though modern attacks often have perfect grammar)

Technical indicators:

-

Mismatched URLs (display text shows one URL, actual link goes elsewhere)

-

Unusual sender addresses (close but not quite right)

-

Unexpected attachments, especially with double extensions

-

Links to URL shorteners or suspicious domains

Contextual anomalies:

-

Unexpected messages about services you don't use

-

Requests that don't match normal procedures

-

Communications about accounts you don't have

-

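Messages that arrive at suspicious times (for example, an "urgent" payment request from an executive sent late on a Friday or while they are known to be traveling)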
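Two of the technical indicators listed earlier are easy to check mechanically. The first is link text that names one site while the link actually goes to another. The second is a "document" attachment hiding an executable extension. The sketch below uses only Python's standard library. The extension sets are illustrative assumptions rather than a complete catalog, and a real mail filter would combine many more signals.

```python
import re
from html.parser import HTMLParser
from typing import Optional
from urllib.parse import urlsplit

# Visible link text that itself looks like a URL or bare domain.
URL_LIKE = re.compile(r"^(?:https?://)?([a-z0-9.-]+\.[a-z]{2,})(?:[/:?#]\S*)?$", re.IGNORECASE)

# Illustrative sets only: executables disguised behind a document-like extension.
RISKY_EXTENSIONS = {"exe", "scr", "js", "vbs", "bat", "cmd", "lnk", "hta", "ps1"}
DECOY_EXTENSIONS = {"pdf", "doc", "docx", "xls", "xlsx", "jpg", "png", "txt"}


class LinkCollector(HTMLParser):
    """Collects (href, visible text) pairs for every <a> element."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[tuple[str, str]] = []
        self._href: Optional[str] = None
        self._text: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href") or ""
            self._text = []

    def handle_data(self, data):
        if self._href is not None:  # only text inside an open <a>
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def _norm(host: str) -> str:
    """Lowercase and drop a leading 'www.' so www.example.com matches example.com."""
    host = host.lower()
    return host[4:] if host.startswith("www.") else host


def mismatched_links(html: str) -> list[tuple[str, str]]:
    """Links whose visible text shows one host while the href points to another."""
    parser = LinkCollector()
    parser.feed(html)
    flagged = []
    for href, text in parser.links:
        shown = URL_LIKE.match(text)
        if shown is None:
            continue  # text like "Click here" makes no claim to compare against
        if _norm(shown.group(1)) != _norm(urlsplit(href).hostname or ""):
            flagged.append((text, href))
    return flagged


def has_double_extension(filename: str) -> bool:
    """True for names like 'Invoice.pdf.exe' where a decoy extension hides the real one."""
    parts = filename.lower().rsplit(".", 2)
    return len(parts) == 3 and parts[1] in DECOY_EXTENSIONS and parts[2] in RISKY_EXTENSIONS
```

Text such as "Click here" is skipped rather than flagged, because it makes no claim about the destination. Such links are exactly the ones to hover over before clicking.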

Behavioral Red Flags

Requests that should raise concerns:

Breaking policy:

-

Asking you to violate security policies

-

Requesting you skip normal procedures

-

Suggesting you don't tell anyone about the request

Pressure tactics:

-

Extreme urgency without clear justification

-

Appeals to authority to bypass verification

-

Requests to keep something secret

-

Threats or intimidation

Unusual requests:

-

People asking for information they should already have

-

Requests from "familiar" people that don't match their normal communication style

-

Technical requests from non-technical people or vice versa

Trust But Verify

The most important defense is the principle of trust but verify: independently verify requests through separate communication channels before complying.

If you receive an email asking you to reset your password:

-

Don't click the link in the email

-

Go directly to the website by typing the URL yourself or using a bookmark

-

Check if there's actually a problem with your account

If the "CEO" emails requesting an urgent wire transfer:

-

Don't reply to the email

-

Call the CEO's office directly using a number you look up independently

-

Verify the request through established channels

If someone calls claiming to be from tech support:

-

Don't give them remote access

-

Hang up and call your IT department's known number

-

Report the suspicious call

If a colleague sends an unexpected attachment:

-

Don't open it immediately

-

Contact them through a different method (call, in-person) to verify they sent it

-

Be suspicious if their communication style seems off

Technical Defenses

While social engineering attacks humans rather than technology, technical controls provide layers of protection:

Email filtering: Advanced email security systems can detect phishing based on sender reputation, known malicious URLs, and content analysis.

Browser anti-phishing protection: Services like Microsoft Defender SmartScreen (built into Microsoft Edge) and Google Safe Browsing (used by Chrome and other major browsers) warn when visiting known malicious sites.

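Email authentication: SPF, DKIM, and DMARC help verify that emails actually come from the domains they claim:
- SPF lists which servers may send mail for a domain.
- DKIM lets the sending domain sign messages cryptographically.
- DMARC ties both checks to the domain in the visible From: address and tells receivers what to do with messages that fail.

None of them stops an attacker who sends from a lookalike domain that the attacker legitimately controls.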

Multi-factor authentication (MFA): Even if attackers steal passwords through phishing, MFA prevents account access without the second factor. However, as noted earlier, one-time codes, especially those sent by SMS, can be bypassed through real-time relay attacks.

Password managers: These autofill credentials only on legitimate sites, providing a strong signal when something is wrong (if your bank password doesn't autofill, you might not be on your bank's actual website).

Network monitoring: Organizations can detect suspicious patterns like many employees suddenly visiting the same unusual domain.

Sandboxing: Email attachments and downloads can be opened in isolated environments where they can't cause harm.

User reporting: Easy mechanisms for employees to report suspicious emails help security teams identify and block phishing campaigns.
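On the receiving side, the outcomes of the SPF, DKIM, and DMARC checks are usually recorded in an `Authentication-Results` header (RFC 8601) added by the receiving mail server. The sketch below reads those verdicts with Python's standard `email` package. It assumes the organization's own server identifies itself as `mx.corp.example`, a placeholder. Any Authentication-Results header naming a different server is ignored, because a sender can insert such headers into the message themselves. The rule "quarantine unless DMARC passes" is only an example policy.

```python
import re
from email import message_from_string

# Matches method=result pairs such as "spf=pass" or "dmarc=fail".
VERDICT = re.compile(r"\b(spf|dkim|dmarc)=([a-z]+)", re.IGNORECASE)


def auth_verdicts(raw_message: str, trusted_server: str) -> dict[str, str]:
    """SPF/DKIM/DMARC results recorded by our own receiving server only."""
    msg = message_from_string(raw_message)
    verdicts: dict[str, str] = {}
    for header in msg.get_all("Authentication-Results", []):
        server_id = str(header).split(";", 1)[0].strip().lower()
        if server_id != trusted_server.lower():
            continue  # possibly forged by the sender; don't trust it
        for method, result in VERDICT.findall(str(header)):
            verdicts.setdefault(method.lower(), result.lower())  # keep the first result per method
    return verdicts


def should_quarantine(verdicts: dict[str, str]) -> bool:
    """Example policy: hold any message whose From: domain did not pass DMARC."""
    return verdicts.get("dmarc") != "pass"
```

In this sketch, a message spoofing paypal.com would pass along a forged "all pass" header. It would still be quarantined, because only the verdict line written by `mx.corp.example` is read, and that line reports `dmarc=fail`.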

Human Defenses: Security Awareness

Technical controls help, but humans remain the ultimate defense against social engineering. Effective security awareness requires:

Regular training: Not just annual compliance training, but ongoing education about current threats.

Phishing simulations: Organizations send fake phishing emails to employees to test awareness and identify who needs additional training. When someone clicks, they're immediately shown educational content explaining what signals they missed.

Positive security culture: Creating an environment where reporting suspicious messages is encouraged and asking questions is normal rather than embarrassing.

Realistic scenarios: Training that uses actual examples employees might encounter, not just obvious phishing.

Just-in-time learning: Education delivered when it's relevant; for instance, explaining a social engineering technique right after someone encounters it.

Leadership participation: When executives participate in security training and follow policies, it sets expectations for the entire organization.

Most importantly, organizations should create a culture where it's not just acceptable but expected to verify unusual requests, even from authority figures. An employee who calls the CEO's office to verify an urgent wire transfer request shouldn't feel they're being overly cautious; they're following best practices.

The Scale of Social Engineering

The prevalence and impact of social engineering are staggering. Research and incident reports show that phishing and social engineering remain the primary initial access vector for cyberattacks. Over 90% of successful breaches involve some form of social engineering, most commonly phishing emails.

Studies on phishing effectiveness show concerning patterns. A significant percentage of phishing emails are opened by recipients, and a portion of those who open phishing emails click on malicious links or attachments. While exact percentages vary by study and target population, the success rates are high enough that even sophisticated organizations with security awareness training experience successful phishing attacks.

Security awareness training has been shown to reduce susceptibility to phishing. Organizations that implement regular training and phishing simulations see substantial improvements in employee behavior. Multi-factor authentication blocks the vast majority of automated account compromise attempts, even when credentials are stolen through phishing. Organizations with strong security cultures where employees feel comfortable reporting suspicious messages detect and respond to phishing campaigns significantly faster than those without such cultures.

The data illustrates why social engineering is the primary attack vector. Technical exploits are expensive, require significant skill, and may not work against all targets. Social engineering is cheap, requires less technical sophistication, and works against even well-defended organizations if the right person is targeted with the right message at the right time.

The fact that over 90% of successful cyberattacks involve social engineering, usually phishing, deserves emphasis. It means that organizations could prevent a large share of breaches by effectively addressing social engineering. No amount of firewall rules, antivirus software, or intrusion detection will stop an attacker who tricks a user into voluntarily providing credentials or downloading malware.

This is why the next major frontier in security isn't better technical controls; it's better human defenses. Organizations are investing heavily in security awareness training, phishing simulation, and creating cultures where security is everyone's responsibility rather than just the IT department's concern.

In the next part, we'll examine specialized malware components: keyloggers, bots and botnets, backdoors, and rootkits. We'll see how these pieces fit into the complete picture of modern malware operations.